Advance quickly and successfully during your IT modernization journey

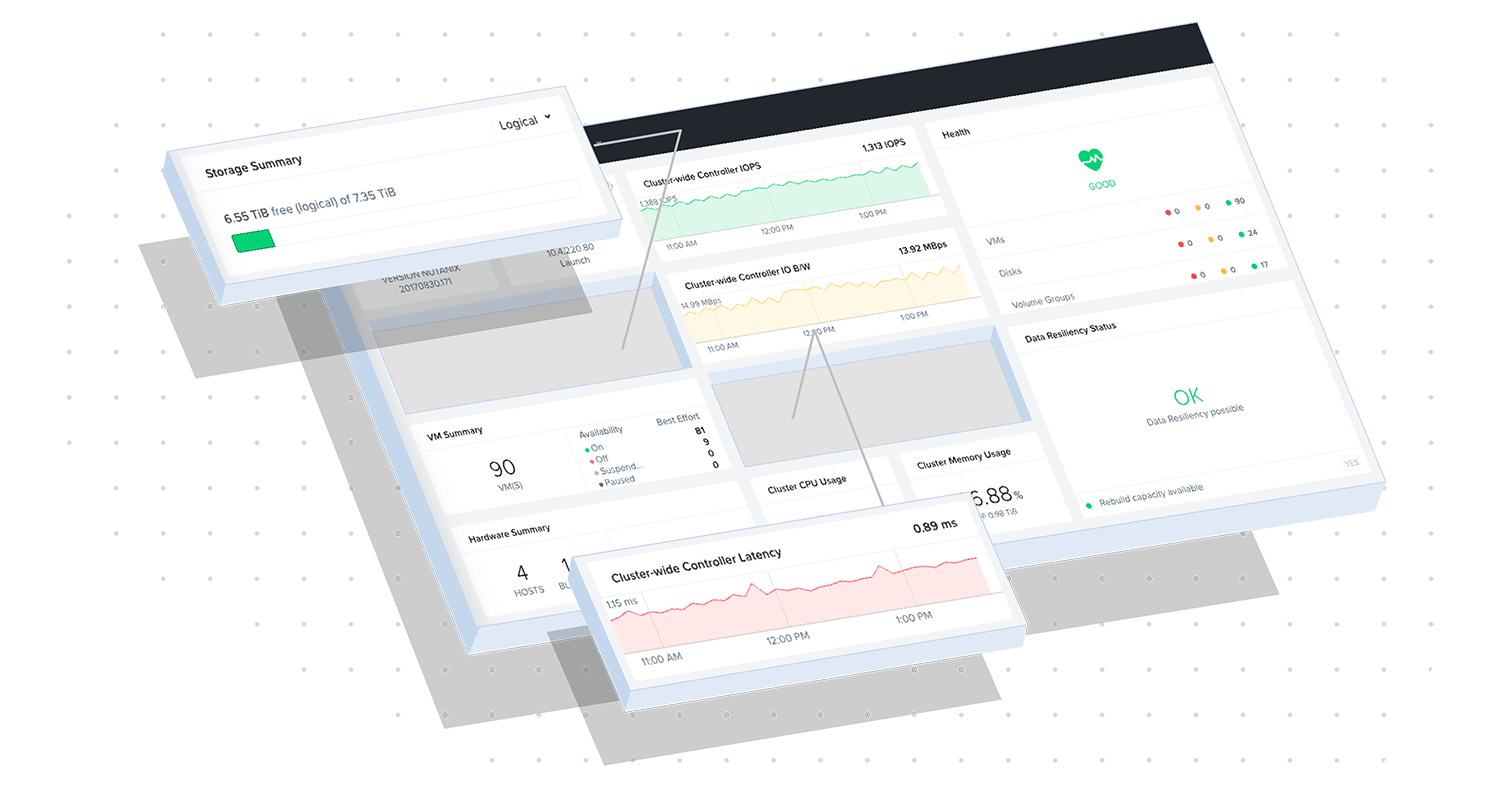

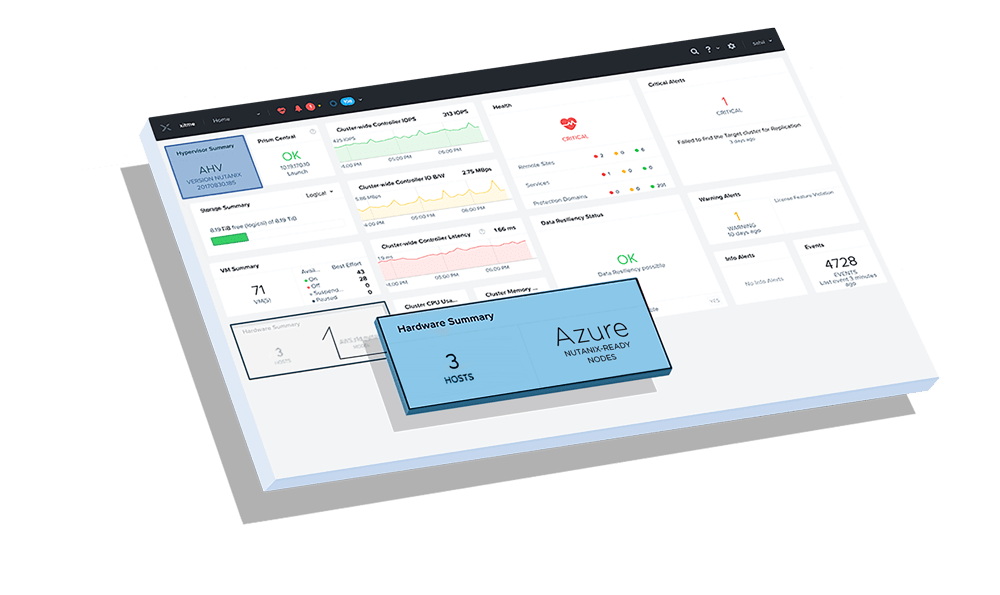

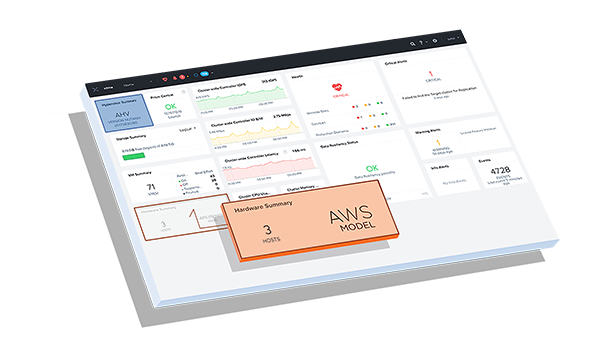

Deploy our Nutanix Cloud Platform within one hour and scale your infrastructure in minutes at the edge, datacenter, colocation facility, and public cloud – including AWS and Azure – without disruption. Manage diverse IT environments as a unified whole, using a centralized management dashboard that leverages intelligent AI-driven automation. Apps and workloads move easily between environments, delivering agility to meet evolving priorities and mandates.

Advance quickly and successfully during your IT modernization journey

Deploy our Nutanix Cloud Platform within one hour and scale your infrastructure in minutes at the edge, datacenter, colocation facility, and public cloud – including AWS and Azure – without disruption. Manage diverse IT environments as a unified whole, using a centralized management dashboard that leverages intelligent AI-driven automation. Apps and workloads move easily between environments, delivering agility to meet evolving priorities and mandates.

Modernize on-premises

- Eliminate IT silos: Compute, storage, networking

- Datacenter consolidation

- Run any workload

- Unify management and control

- Effortless self-service and automation

- One-click private cloud

Extend to edge and cloud

- Fastest path to hybrid cloud

- Extend, burst and migrate apps and data at scale in any cloud

- Run it all with unified management

- Data gravity – secure, move and use data where needed

- Certified ruggedized systems (C4ISR | milspec) with partners

Be cloud smart

- Unify private and public clouds

- Manage datacenters, clouds and edge as one entity

- Fully portable workloads and licenses

- Native storage snapshots can be moved anywhere

- Unify storage for easy data management

Built-in platform security

- Ships pre-STIG'd - hardened by default

- Automated configuration and remediation

- Aligns to Zero Trust Architecture

- Vetted and approved for CISA's CDM and DoDIN APL

Spotlight on customer references

"Nutanix has put us firmly back in the driving seat, meeting all the scalability, resilience and easy management requirements of what was a major change of direction for the DWP. Beyond that, Nutanix has impressed us with the completeness of its solution, high levels of service and support and its vision for an agile multicloud future towards which we’re already moving.”

- Jamie Faram, Head of Hybrid Cloud Services Operations, Department for Work and Pensions

“With Nutanix, we have gained workload portability for critical water management systems, rapid provisioning, and simplified administration for multiple environments to help deliver better services to our customers.”

- Ian Robinson, Chief Information Officer, WaterNSW

“With the Nutanix platform at the heart of our IT infrastructure, we now have the scalability, high- performance platform and capabilities to execute larger workflows systematically. Also, the agility and the flexibility of the technology is what we found the most outstanding. This combination will increase the quality of public service with the aim of increasing economic growth.”

- Asmawa Tosepu, Head of Data and Information Center, Ministry of Home Affairs, Republic of Indonesia

Strengthen your security posture

Protect your data and applications

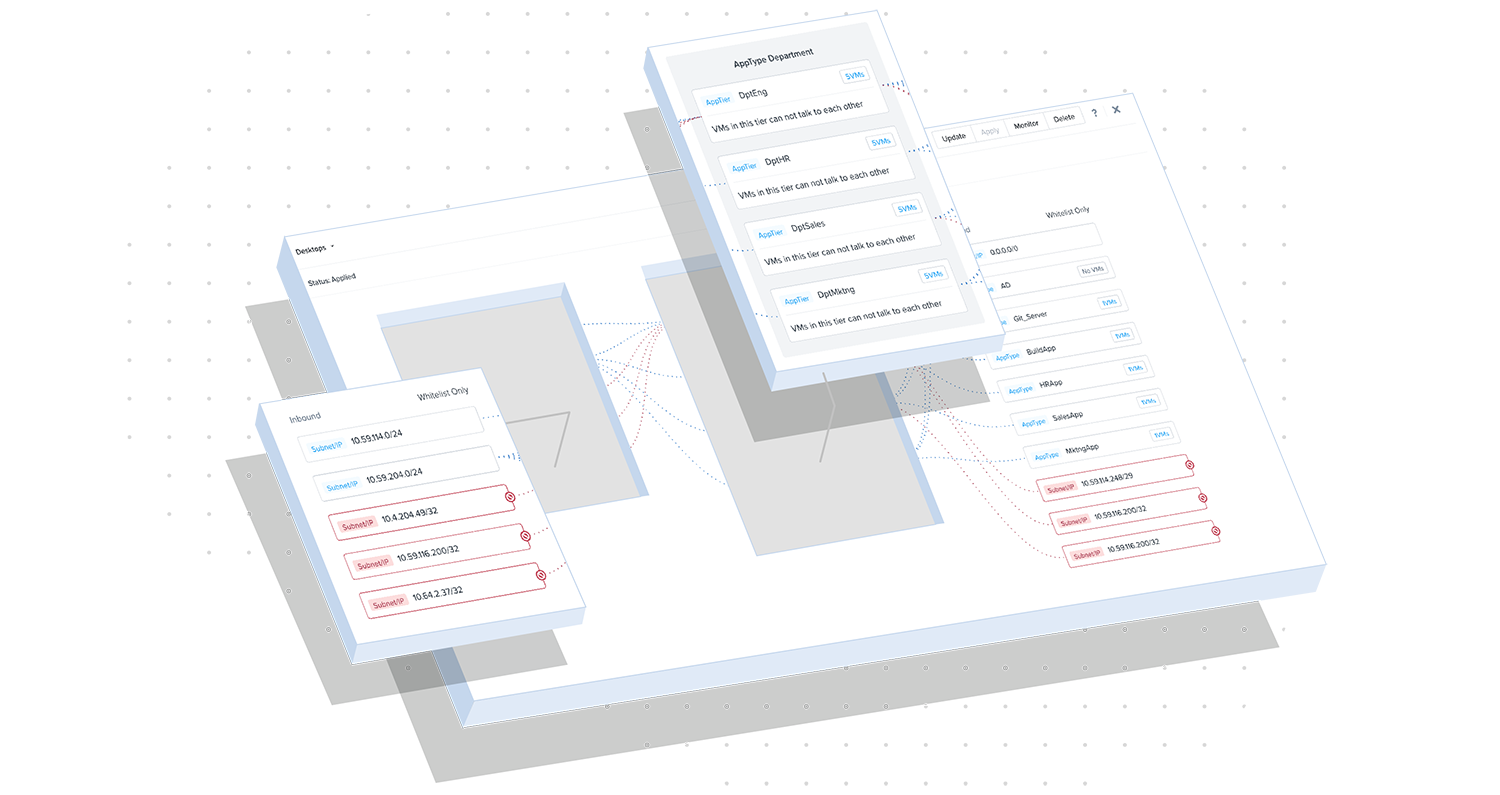

Nutanix provides native platform security, including app-centric security via micro-segmentation, RBAC, native storage snapshots, data-at-rest and data-in-transit encryption, and immutable S3-compatible WORM storage.

Accelerate your alignment to a Zero Trust Architecture (ZTA)

Nutanix can facilitate an easier transition to a ZTA future by providing the necessary foundation on which government agencies can build their Hybrid Multicloud Infrastructure.

The Nutanix AOS 5.20 STIG

Automate security hardening of your Nutanix systems to protect your vital infrastructure from possible penetration or compromise.

Enable the new hybrid government workforce

The new normal: employees working from anywhere

Enable remote workers to securely access critical applications, instructional videos, and data on any device via a browser. Nutanix solutions include Citrix Virtual Apps and Desktops on Nutanix AHV, Microsoft Hyper-V and VMware VSphere.

Partner eco-system includes

Red Hat

Two steps to hybrid cloud for government: How Nutanix and Red Hat simplify IT operations and modernize applications

Citrix

Citrix DaaS on Nutanix delivers seamless access to apps, virtual desktops, and protected data—from any cloud, on any device, in any location, at any scale

AWS

Extend your private cloud into AWS GovCloud with Nutanix NC2

Splunk

Nutanix provides simplicity, stability, and scalability for Splunk deployments. Focus on data analytics and insights, not the infrastructure.

Compliance and certifications

Nutanix has obtained numerous global certifications and continue to expand these efforts in order to help you maintain compliance in all areas of operation.

Featured resources

Nutanix Federal

Innovation Lab

A dedicated space available to customers and partners to develop and test proof of concepts focused on advancing their digital transformation.

5th Annual Nutanix Enterprise Cloud Index for U.S. Federal

U.S. Agencies lead in deploying mixed IT environments. Read the report for more findings!

5th Annual Enterprise Cloud Index Report for Public Sector

Government organizations are planning a 5x increase in hybrid multicloud adoption within the next 3 years. Read the report for more findings!

Nutanix U.S. Federal Support Service

Delivering best-in-class support services 24 x 7 x 365 provided by U.S. citizen engineers in U.S.-based support centers.

Carahsoft

Email: nutanix@carahsoft.com

GSA Schedule: GS-35F-0119YSEWP

• Group A Small: NNG15SC03B

• Group D Other Than Small: NNG15SC27B

immixGroup

Email: nutanixteam@immixgroup.com

GSA Schedule - GS-35F-0511T

Take a test drive!

Build your clouds your way in a few clicks. Instantly complete all your IT tasks on a unified cloud platform with Nutanix. Try it today.