It wouldn’t be an exaggeration to state that data drives technology – and by extension, business – today. Data is the lifeblood of digital transformation and fundamental changes to business models that are taking place in every industry and vertical. This is especially true in the wake of the COVID-19 pandemic – the number of ways people access and use data for work has increased manifold.

According to an oft-referenced IDC study, there will be more than two and a half times as much data in 2025 as there is today, and about 30% of it will be real-time.

Needless to say, organizations and IT teams face a tremendous challenge in addressing data storage and transfer problems such as silos and inaccessibility. With thousands of users and devices creating millions of files every day, system admins and application developers find it nearly impossible to handle the enormous volume of (big) data with legacy storage infrastructure.

In addition to complexity, IT teams are also bogged down by ever-changing regulations governing the storage and use of data in different contexts. IT admins and architects find themselves hard-pressed to balance functional, technological, competitive and regulatory needs when it comes to managing and using data strategically.

So, it is necessary to examine the changing nature of data and understand fully the challenges it presents before looking at the technology-driven storage and access options.

The Challenge of Unstructured Data

Most organizations (and not just enterprises) currently have some sort of digital transformation initiative in place. The result is an explosion of data generated not just by users, but also by AI-based algorithms, analytics tools, IoT devices, machines at the edge, and APIs.

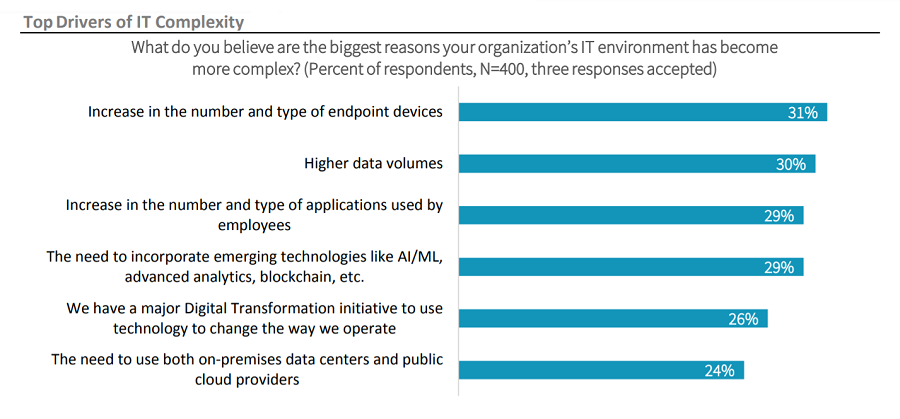

The increase in the volume of data generated and the increase in IT complexity go hand in hand. Endless data creation by a growing number of users, applications and devices is one of the leading causes (and outcomes) of complexity.

That’s not all. The very nature of data is changing. About 80 to 90 percent of all data generated today is unstructured, i.e. raw and unorganized. This includes documents, emails, text snippets, photos, survey responses, infographics, call transcripts, edited videos, platform-specific posts, machine logs, etc. – generated by social media, instant messengers, and a host of different applications and web services.

The trouble with unstructured data is that unlike structured data, it doesn’t conform to conventional data models – it isn’t defined by a schema, it might consist of multiple basic data types, and it is difficult to categorize, store, and manage in a relational database.

No surprise then that fewer than one in five organizations are able to put unstructured data to any good use.

“Because structured data is easier to work with, companies have already been able to do a lot with it. But since most of the world’s data, including most real-time data, is unstructured, an ability to analyze and act on it presents a big opportunity,” said Michael Shulman, Finance Lecturer at MIT Sloan and Head of ML at Kensho (now part of S&P Global).

File-based content made up of unstructured data drives critical functions in pretty much every industry today. Digital media, collaboration, and business intelligence are the major workloads that are influencing these changes.

Businesses need storage software and infrastructure that are flexible and scalable to meet these evolving data needs. At the same time, they must be “smart” enough to simplify management and automate data operations.

In other words, there is an urgent need for organizations to deploy storage architecture that keeps pace with the growth and complexity of unstructured data, enables them to gain insights and deliver value in different use cases, and adheres to the security and regulatory guidelines around this data.

Distributed File Systems (DFS) and Object Storage

According to Gartner, enterprises have two interconnected solutions to store, manage, and access unstructured data in a cost-effective way with minimal infrastructure disruptions.

The first is Distributed File Systems (DFS), which Gartner defines like this:

“Uses a single parallel file system to cluster multiple storage nodes together, presenting a single namespace and a storage pool to provide high-bandwidth data access for multiple hosts in parallel. Data and metadata are distributed over multiple nodes in the cluster to deliver data availability and resilience in a self-healing manner, and to scale capacity and throughput linearly.”

Don’t go “Duh” yet. Here’s the context:

Traditionally, data is stored as files and blocks on storage devices. Storage, in turn, has always been organized in the way it is accessed. At the individual level, files are stored in a hierarchical fashion (within folders) and accessed using popular protocols such as Network File System (NFS) or Server Message Block (SMB), typically on file servers or Network Attached Storage (NAS) devices within data centers. When data is accessed at the “raw” level, it is stored and accessed in blocks using the iSCSI and Fibre Channel protocols, typically on a Storage Area Network (SAN) device in a data center.
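To make the file-versus-block distinction concrete, here is a minimal sketch in Python. It is an illustration only: a temporary directory stands in for a NAS share, an ordinary file stands in for a raw SAN volume, and the 512-byte `BLOCK_SIZE` and both helper functions are assumptions made for the example, not part of NFS, SMB, iSCSI or Fibre Channel.

```python
import os
import tempfile

BLOCK_SIZE = 512  # assumed block size, for illustration only


def read_file_by_path(root: str, *parts: str) -> bytes:
    """File-level access: find data by walking a folder hierarchy."""
    with open(os.path.join(root, *parts), "rb") as f:
        return f.read()


def read_block(volume_path: str, block_number: int) -> bytes:
    """Block-level access: find data by its numbered position on a volume."""
    with open(volume_path, "rb") as f:
        f.seek(block_number * BLOCK_SIZE)
        return f.read(BLOCK_SIZE)


def demo() -> tuple[bytes, bytes]:
    with tempfile.TemporaryDirectory() as root:
        # File access: a named file inside nested folders.
        os.makedirs(os.path.join(root, "finance", "2022"))
        with open(os.path.join(root, "finance", "2022", "report.txt"), "wb") as f:
            f.write(b"quarterly numbers")

        # Block access: a flat "volume" of fixed-size blocks with no names.
        volume = os.path.join(root, "volume.img")
        with open(volume, "wb") as f:
            f.write(b"A" * BLOCK_SIZE + b"B" * BLOCK_SIZE + b"C" * BLOCK_SIZE)

        by_path = read_file_by_path(root, "finance", "2022", "report.txt")
        by_block = read_block(volume, 1)  # second block on the volume
        return by_path, by_block


file_data, block_data = demo()
```

The file read needs a name and a folder path, while the block read needs only a block number. Neither approach carries descriptive metadata with the data itself.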

Obviously, these resources have been located close to compute resources to maximize performance and accessibility, reduce latency, and simplify administration.

Coming back to DFS, what Gartner means is that a DFS is a storage system “distributed” over multiple servers and/or locations that allows users and applications to access any file transparently (regardless of its underlying server or location).

DFS brings four types of transparency to data operations:

- Structure transparency – The client has no idea how many servers or storage devices make up the system or where they’re located.

- Access transparency – The client doesn’t know whether they’re accessing a local or a remote copy of the file, or on which device. The file is automatically updated and synced at one or more locations.

- Naming transparency – The file’s name doesn’t give away its location or path, and remains constant when it is transferred.

- Replication transparency – If a file is copied to multiple nodes, the copies and their locations are hidden from other nodes, so the client sees a single file.
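The four kinds of transparency listed above can be sketched with a toy in-memory model. This is not how Nutanix Files or any real DFS is implemented. The `StorageNode` and `Namespace` classes, the placement rule, and the default of two replicas are all hypothetical, chosen only to show that the client works with a path and never with a server.

```python
from dataclasses import dataclass, field


@dataclass
class StorageNode:
    name: str
    files: dict[str, bytes] = field(default_factory=dict)


class Namespace:
    """A single logical namespace spread over several storage nodes."""

    def __init__(self, nodes: list[StorageNode], replicas: int = 2) -> None:
        self._nodes = nodes
        self._replicas = min(replicas, len(nodes))
        self._placement: dict[str, list[StorageNode]] = {}

    def write(self, path: str, data: bytes) -> None:
        # Pick nodes deterministically from the path; the client never sees this.
        start = sum(path.encode()) % len(self._nodes)
        targets = [
            self._nodes[(start + i) % len(self._nodes)]
            for i in range(self._replicas)
        ]
        for node in targets:
            node.files[path] = data
        self._placement[path] = targets

    def read(self, path: str) -> bytes:
        # Any replica that still holds the file can serve the read.
        for node in self._placement[path]:
            if path in node.files:
                return node.files[path]
        raise FileNotFoundError(path)


nodes = [StorageNode("node-a"), StorageNode("node-b"), StorageNode("node-c")]
fs = Namespace(nodes)
fs.write("/projects/plan.docx", b"draft v1")

# Simulate losing one replica: the client's call does not change.
fs._placement["/projects/plan.docx"][0].files.clear()
content = fs.read("/projects/plan.docx")
```

The path `/projects/plan.docx` says nothing about which node holds the file (naming transparency), and the read succeeds from the surviving copy without the caller knowing that a replica exists (replication and access transparency).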

The second storage system is Object Storage, about which Gartner is equally knotty:

“Refers to systems and software that house data in structures called “objects” and serve clients data via RESTful HTTP APIs, such as Amazon Simple Storage Service (S3), which has become the de facto standard for accessing object storage.”

Don’t go “Huh” yet. Here’s the English translation of Gartner’s definition:

With object storage, data is stored, accessed and managed in the form of objects rather than files or blocks. An “object” consists of the data, its metadata and a unique identifier, all of which can be securely accessed through APIs or HTTP/HTTPS.

Instead of the conventional directory and filename structure found in traditional storage architecture, objects are stored and identified using unique IDs. This allows for a flat structure and drastically reduces the amount of overhead and size of metadata needed for storage.
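As a rough illustration of that model, the sketch below keeps objects in a flat dictionary keyed by a generated ID. The `StoredObject` and `Bucket` names and the use of a random UUID as the identifier are assumptions for the example. Real object stores such as S3-compatible services expose this through HTTP APIs rather than Python classes.

```python
import uuid
from dataclasses import dataclass, field


@dataclass
class StoredObject:
    object_id: str                      # unique identifier
    data: bytes                         # the payload itself
    metadata: dict[str, str] = field(default_factory=dict)


class Bucket:
    """A flat container: objects are looked up by ID, not by folder path."""

    def __init__(self) -> None:
        self._objects: dict[str, StoredObject] = {}

    def put(self, data: bytes, **metadata: str) -> str:
        object_id = uuid.uuid4().hex
        self._objects[object_id] = StoredObject(object_id, data, dict(metadata))
        return object_id

    def get(self, object_id: str) -> StoredObject:
        return self._objects[object_id]


bucket = Bucket()
photo_id = bucket.put(b"\x89PNG...", content_type="image/png", project="launch")
photo = bucket.get(photo_id)
```

There is no directory tree to traverse here. A lookup is a single key access, which is one reason a flat layout keeps overhead low as the number of objects grows.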

An object can range from a few KB to terabytes in size and a single container can hold billions of objects. Object storage can handle unlimited media files and scale to multiple petabytes without a degradation in performance. As a result, it is the people’s (system admins’ and application developers’) choice of storage solution to handle unstructured data at scale.

Another advantage of object storage architecture is that metadata resides in the object itself. This means IT admins don’t need to build databases to connect or merge metadata with objects. Further, custom metadata can be changed and added over time, making objects searchable in more and easier ways.
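Here is a small sketch of what searchable, extensible metadata can look like. The object IDs, metadata keys and the `find` helper are made up for illustration and do not correspond to any product's query API.

```python
from typing import Any

# Hypothetical catalogue: object ID -> object, with metadata stored in the object.
objects: dict[str, dict[str, Any]] = {
    "7c9e": {"data": b"<scan bytes>", "metadata": {"type": "mri", "department": "radiology"}},
    "b21a": {"data": b"<video bytes>", "metadata": {"type": "video", "department": "security"}},
    "f044": {"data": b"<scan bytes>", "metadata": {"type": "xray", "department": "radiology"}},
}


def find(store: dict[str, dict[str, Any]], **criteria: str) -> list[str]:
    """Return the IDs of objects whose metadata matches every criterion."""
    return sorted(
        object_id
        for object_id, obj in store.items()
        if all(obj["metadata"].get(k) == v for k, v in criteria.items())
    )


radiology = find(objects, department="radiology")

# Custom metadata can be added later, without a separate database to keep in sync.
objects["f044"]["metadata"]["project"] = "trial-17"
trial = find(objects, project="trial-17")
```

Because the metadata travels with each object, adding a new key such as `project` immediately makes the object findable by that key.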

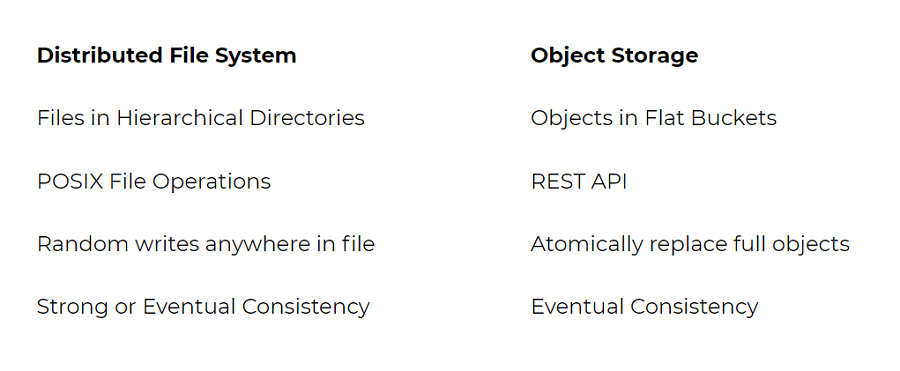

Here’s a quick comparison of DFS and object storage:

Even though there are fundamental differences, the two technologies can work in tandem, and each can outperform the other for particular workloads, business functions, and use cases.

DFS gives applications a wider range of capabilities such as acting as a database backend. It also allows for heavier workloads that need to perform millions of random read/write operations per second.

On the other hand, object storage is ideal for backup and archive workloads because it can handle massive volumes of large files and its TCO is significantly lower than that of a DFS.

Which is why Gartner has a single Magic Quadrant for both DFS and Object Storage vendors.

Nutanix entered the storage quadrant for the first time in 2021 as a Visionary with its Files and Objects platforms, which aim to simplify storage and lower operating costs. The software-defined storage was designed to help IT leaders modernize and unify their unstructured data storage.

Leveraging the Benefits of DFS

Nutanix Files is a flexible, intelligent scale-out DFS file storage service. It supports SMB and NFS in a Nutanix hyperconverged environment with virtualization and is easily deployed across data centers, remote and branch offices (ROBO), edge locations, and clouds.

Files enables instant upgrading of legacy storage infrastructure with a single click.

Case in point, the University of Reading migrated to the Nutanix infrastructure over a weekend and configured 400TB of storage in ten minutes flat.

“With our legacy storage it would have taken weeks to put in new servers and storage but once the Nutanix nodes were racked, we just hit the expand button and minutes later, it was all done. Why couldn’t we have done it this way before?” Ryan Kennedy, Computing Team Manager at the university, said in a case study.

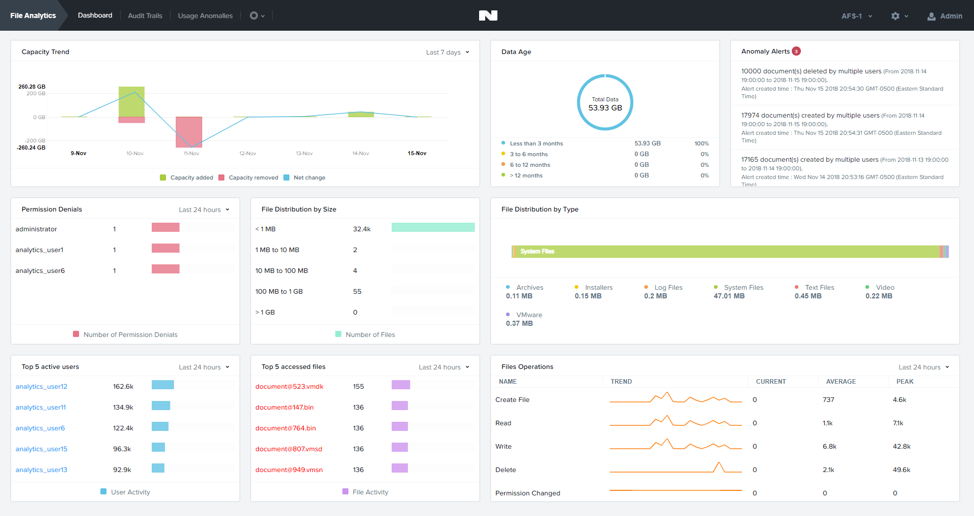

Files is designed to handle thousands of user sessions and billions of files. Admins can scale clusters up (and out) on hardware of their choice by adding more compute and memory to the file server VMs with a single click. They also have visibility into the types and sizes of files being added and modified over time for better capacity management.

Built-in automation and controls let admins track data access and movement throughout the infrastructure. They show who is creating, accessing, moving, deleting and modifying files and permissions in real time, and let admins view historical snapshots.

Nutanix Files helps deliver the right balance of capacity and performance to business-critical applications that demand high performance and high availability (in areas such as medical imaging or video surveillance) with enterprise-grade data protection.

Leveraging the Benefits of Object Storage

Nutanix Objects is a flexible, S3-compatible object storage solution that makes cloud data management as well as data virtualization a breeze for enterprises.

A single S3-compatible namespace can scale up to support petabytes of unstructured data. There’s no minimum storage capacity.

Objects can adapt to store workloads, including those that support file, block or VM storage methods.

Replication, encryption, and immutability of data come out of the box. Admins can enable WORM (Write Once Read Many) policies on any object to ensure data fidelity and data retention compliance. Object versioning is also available for one more level of protection.
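To show how WORM retention and versioning protect data in principle, here is a minimal in-memory sketch. It is not the Nutanix Objects or S3 API. The `VersionedBucket` class, the `WormViolation` exception and the `retain_days` parameter are hypothetical names invented for this example.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


class WormViolation(Exception):
    """Raised when a retained (locked) version would be deleted."""


class VersionedBucket:
    def __init__(self) -> None:
        # key -> {version_id: (data, retain_until)}
        self._versions: dict[str, dict[int, tuple[bytes, Optional[datetime]]]] = {}
        self._next_version = 1

    def put(self, key: str, data: bytes, retain_days: int = 0) -> int:
        """Store a new version; earlier versions are never overwritten."""
        retain_until = None
        if retain_days > 0:
            retain_until = datetime.now(timezone.utc) + timedelta(days=retain_days)
        version_id = self._next_version
        self._next_version += 1
        self._versions.setdefault(key, {})[version_id] = (data, retain_until)
        return version_id

    def get(self, key: str, version_id: Optional[int] = None) -> bytes:
        """Return a specific version, or the newest one by default."""
        versions = self._versions[key]
        if version_id is None:
            version_id = max(versions)
        return versions[version_id][0]

    def delete_version(self, key: str, version_id: int) -> None:
        """Delete a version unless its retention period is still running."""
        _, retain_until = self._versions[key][version_id]
        if retain_until is not None and datetime.now(timezone.utc) < retain_until:
            raise WormViolation(f"{key} v{version_id} is locked until {retain_until:%Y-%m-%d}")
        del self._versions[key][version_id]


bucket = VersionedBucket()
v1 = bucket.put("contract.pdf", b"signed copy", retain_days=30)
v2 = bucket.put("contract.pdf", b"amended copy")
latest = bucket.get("contract.pdf")
original = bucket.get("contract.pdf", v1)

try:
    bucket.delete_version("contract.pdf", v1)
    locked = False
except WormViolation:
    locked = True

bucket.delete_version("contract.pdf", v2)  # no retention set, so this succeeds
```

Writing to an existing key adds a version instead of replacing the old one, and the retained version cannot be removed until its lock expires. Together, those two behaviors are what make the data effectively immutable for compliance purposes.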

Objects can quickly be identified by tagging them on the basis of projects, compliance categories, etc. It’s easy to prevent data from being overwritten by creating copies of objects.

As businesses and ISVs develop cloud-native apps, they rely on an object-based, S3 API-centric model for storage provisioning and automation.

Cloudifying Data with a Hyperconverged Infrastructure (HCI)

Companies continue to relentlessly adopt public, private, and hybrid cloud models for their data processing needs. It is safe to say that the cloud has replaced the data center as the data repository of the enterprise. In fact, IDC predicts that half of all data will be stored in the public cloud.

However, most companies seem to be perennially caught in the midst of a constant transition to a better IT infrastructure. They get into a cycle of solving point problems instead of looking at IT architecture change as a whole. As a result, they end up being carted around from one storage silo to another.

HCI serves as the critical in-between stage in this migration by reducing traditional infrastructure stacks down to scalable building blocks, with compute, storage, and networking built in. Nutanix has taken HCI solutions to the next level by making storage services part of the enterprise cloud through simple software enhancements.

The onus remains on organizations to deploy better storage infrastructure that allows them to build better data models to drive business transformation.

Dipti Parmar is a marketing consultant and contributing writer to Nutanix. She’s a columnist for major tech and business publications such as IDG’s CIO.com, Adobe’s CMO.com, Entrepreneur Mag, and Inc. Follow Dipti on Twitter @dipTparmar or connect with her on LinkedIn for little specks of gold-dust-insights.

© 2022 Nutanix, Inc. All rights reserved.