The rate of new application development has exploded over the past decade and appears to be accelerating. Some analysts expect half a billion applications to be created by the end of 2023, the same number created over the previous 40 years. That number is expected to grow by 50% by 2026.

“The data these apps create and use can bring tremendous value to companies,” said Lee Caswell, senior vice president of product and solutions marketing at Nutanix.

“Data insights are more critical than ever in a competitive marketplace.”

That can mean customer data, which leads to better experiences or services. Or real-time inventory tracking data that help manage supply chains. Caswell said there are also data from IT systems that help monitor and adjust operations to meet customer and employee needs.

“New technologies make it easier for IT teams to facilitate application developers, who are creating apps aimed at bringing more value to the company,” he said.

“These new apps are usually additive and require consistent access to data, whether that data is stored as files, blocks or objects - and whether it lives in the public cloud, the data center or at the edge,” he said.

He said modern IT systems powered by hyperconverged infrastructure (HCI) software – such as the Nutanix Cloud Platform – are ready for developing, running and scaling up present and future applications. As businesses update existing applications or onboard new ones, this kind of platform allows IT teams to optimize workloads across their data center and public clouds.

As application development “in the cloud, for the cloud” matures, emerging technologies can lead to new revenue streams, according to Sid Nag, research VP at Gartner.

“Driven by the maturation of core cloud services, the focus of differentiation is gradually shifting to capabilities that can disrupt digital businesses and operations in enterprises directly,” Nag said.

“IT leaders who view the cloud as an enabler rather than an end state will be most successful in their digital transformational journeys. Organizations combining cloud with other adjacent, emerging technologies will fare even better.”

The challenge for companies is to find the optimum mix of architecture and technology that takes full advantage of the cloud computing model and enables the design, development and operation of workloads in the cloud.

The combination of cloud-based infrastructure and platforms that allow for the creation of scalable, resilient, automated and observable systems is the basis for cloud-native application development.

Since the cloud itself means different things to different people, organizations define and stick to certain coding fundamentals, best practices, and processes to meet their app development and delivery objectives.

The Cloud Native Computing Foundation and others describe cloud-native technologies as innovations that empower organizations to build and run scalable applications in modern, dynamic environments such as public, private and hybrid clouds. Containers, service meshes, microservices, immutable infrastructure and declarative APIs exemplify this approach.

“When people say they want the cloud, what they really want is the ability to flexibly deploy resources and reconfigure them as needed,” Steve McDowell of Moor Insights & Strategy told The Forecast.

“Cloud-native is about packaging, managing and running a workload that is sensitive to its environment.”

However, there are many architecture considerations to make before a company can fully embrace cloud-native application development.

Hybrid Multicloud Architecture

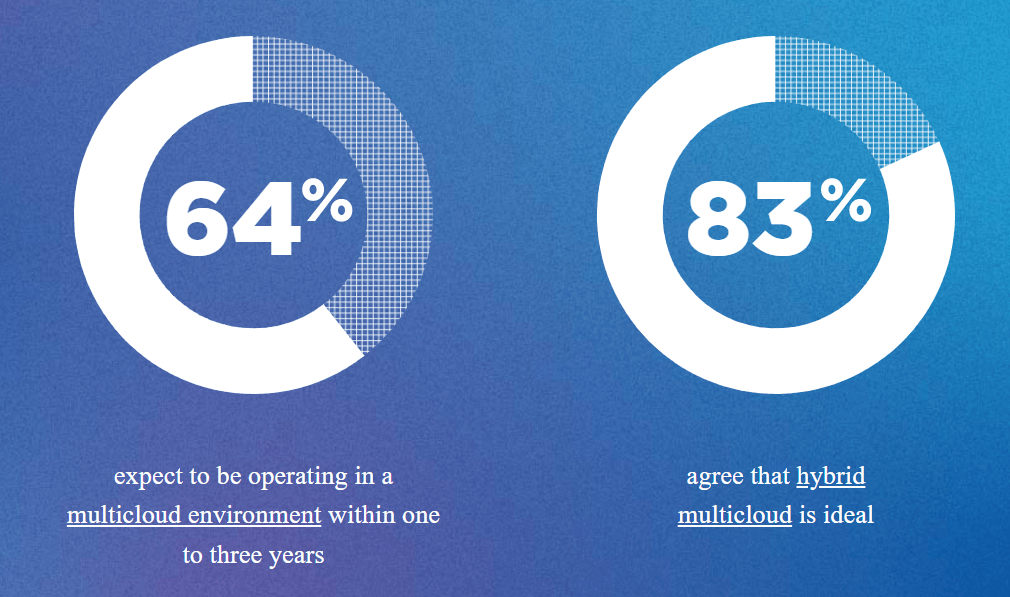

The Enterprise Cloud Index report, in its last two editions, has shown that IT leaders worldwide say a hybrid, multicloud environment is the ideal IT deployment model.

While a third of respondents already have multicloud deployments in place, the rest expect to be there soon.

Source: Nutanix

While interoperability is crucial among these multiple cloud environments, the study also found that application mobility is top of mind for organizations. 91% of all companies surveyed said they had moved one or more applications to a new environment – primarily a hybrid multicloud infrastructure – in the last 12 months. Security, performance and control are some of the primary reasons for this shift.

“Enterprise applications and data need to be portable across varying public cloud environments, and also be interoperable with on-premises private clouds,” said Krista Macomber, senior analyst, data protection and multi-cloud data management at Evaluator Group.

Enterprises are more strategic about using IT infrastructure related to application performance and criticality. AI and ML are an integral part of most software today. As the machine does more and the human does less, companies are looking for dynamic infrastructure technologies that bring out the best in both.

In the process, they are moving away from a cloud vs. data center approach and focusing on being “cloud-smart” instead of “cloud-first.” This involves matching every workload to the right cloud environment based on various performance, cost and security considerations.
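The “cloud-smart” matching described above can be pictured as a simple weighted scoring exercise. The sketch below is only an illustration: the environment names, the 1-to-5 scores, the weights and the `place` function are all hypothetical and not taken from any Nutanix product or survey.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Environment:
    name: str
    performance: int      # illustrative score, 1 (low) to 5 (high)
    cost_efficiency: int  # illustrative score, 1 (low) to 5 (high)
    security: int         # illustrative score, 1 (low) to 5 (high)


@dataclass(frozen=True)
class Workload:
    name: str
    performance_weight: float  # how much this workload cares about each factor
    cost_weight: float
    security_weight: float


def score(workload: Workload, env: Environment) -> float:
    """Weighted fit of one workload to one environment."""
    return (
        workload.performance_weight * env.performance
        + workload.cost_weight * env.cost_efficiency
        + workload.security_weight * env.security
    )


def place(workload: Workload, environments: list) -> Environment:
    """Pick the environment with the highest weighted score."""
    return max(environments, key=lambda env: score(workload, env))


# Hypothetical environments and workloads, for illustration only.
ENVIRONMENTS = [
    Environment("public-cloud", performance=3, cost_efficiency=4, security=3),
    Environment("private-data-center", performance=4, cost_efficiency=2, security=5),
    Environment("edge", performance=5, cost_efficiency=3, security=3),
]

payroll = Workload("payroll", performance_weight=0.2, cost_weight=0.2, security_weight=0.6)
batch_reports = Workload("batch-reports", performance_weight=0.2, cost_weight=0.7, security_weight=0.1)

for workload in (payroll, batch_reports):
    print(workload.name, "->", place(workload, ENVIRONMENTS).name)
```

With these made-up numbers, the security-heavy workload lands in the private data center and the cost-sensitive one in the public cloud. In practice the scores would come from measured performance, actual pricing and the organization's own security requirements.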

Serverless Computing

Serverless is a cloud-native application development and service delivery model that allows developers to build and run applications without having to manage servers. The servers are still part of the underlying infrastructure, but they’re totally abstracted from the development life cycle.

The key difference between serverless and other cloud delivery models is that the provider manages app scaling as well as provisioning and maintaining the cloud infrastructure necessary for it. Developers only need to package their code in ready-to-deploy containers. All application monitoring and maintenance tasks such as OS management, load balancing, security patching, logging and telemetry are offloaded to the cloud vendor.

Serverless turns the current concept of pay-as-you-go on its head: serverless apps automatically scale up and down with demand and are metered accordingly using an event-driven execution model. This means that when a serverless function is not being used, it isn’t costing the organization anything.

Contrast this with standard cloud computing service models, where clients purchase pre-defined units of application or infrastructure resources from the cloud vendor for a fixed monthly price. The burden of scaling the package up or down rests on the client – the infrastructure necessary to run an application is always active and billed for regardless of whether the app is being used.

Within a serverless architecture, apps are launched only when needed – an event triggers the app code to run and the cloud provider dynamically allocates resources to the app. The client pays only while the code is executing.
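The difference between the two billing models can be made concrete with a little arithmetic. The following is a minimal sketch, assuming a flat monthly price for the fixed plan and a per-second execution price for serverless. Both prices and both function names are hypothetical, and real providers' pricing involves more factors than this.

```python
def fixed_plan_cost(monthly_price: float) -> float:
    """Fixed plan: the same charge applies whether or not the app is used."""
    return monthly_price


def serverless_cost(invocations: int, avg_seconds: float, price_per_second: float) -> float:
    """Event-driven plan: billed only for the time the code is executing."""
    return invocations * avg_seconds * price_per_second


# Hypothetical prices chosen for illustration only.
FIXED_MONTHLY_PRICE = 50.0
PRICE_PER_SECOND = 0.0001

idle_month = serverless_cost(invocations=0, avg_seconds=0.5, price_per_second=PRICE_PER_SECOND)
busy_month = serverless_cost(invocations=100_000, avg_seconds=0.5, price_per_second=PRICE_PER_SECOND)

print(f"fixed plan, any month:  {fixed_plan_cost(FIXED_MONTHLY_PRICE):.2f}")
print(f"serverless, idle month: {idle_month:.2f}")
print(f"serverless, busy month: {busy_month:.2f}")
```

Under these assumptions, a month with no triggering events costs nothing on the serverless plan, while the fixed plan is billed in full regardless of usage.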

It is possible to develop fully or partially serverless apps, according to workload requirements. Overall, serverless apps are leaner, faster and simpler to modify/modernize than their traditional counterparts because they deliver just the precise software functionality needed to complete a workload.

Artificial Intelligence (AI)

AI and the cloud have been evolving in parallel over the last decade. Now the two are so interleaved that it is hard to separate one from the other. From Instagram filters to Google searches, the most common tasks people perform dozens of times a day go to show how inextricably AI is woven into human life.

Google CEO Sundar Pichai described AI as having an effect “more profound than electricity or fire” on society. No surprise then, that the global AI software market is expected to be worth $850 billion by 2030.

It is the cloud that delivers these AI and ML models to humans and machines alike. Machine learning models and AI-based applications typically need high processing power and bandwidth. A hybrid, multicloud deployment can deliver this much better than other architectures.

Not only can AI algorithms understand language, sentiment, and other core aspects of business-consumer interaction, but they can also facilitate application development and train other AI programs.

Edge Computing

Edge computing has emerged as a perfect complement to IoT and remote office/branch office (ROBO) workloads. Organizations increasingly want a distributed IT architecture that controls and coordinates devices and customer interactions at remote locations – while synchronizing them with data and applications across multiple cloud services and on-prem data centers.

Edge brings the capabilities of cloud computing from the enterprise’s centralized IT setup to wherever their customers or employees are located, according to Gordon Haff, technology evangelist at Red Hat.

“Edge computing is a recognition that enterprise computing is heterogeneous and doesn’t lend itself to limited and simplistic patterns,” Haff said.

With lower data latency, better last-mile reach, an ideal mix of physical and digital security and less complexity, the edge enables many use cases that need newer and different approaches to application development:

- It vastly improves the performance of AI and ML models by giving them access to sufficient and scalable local storage and compute capabilities.

- It extends the reach of mission-critical applications and workloads to remote locations by processing data locally with containerized code without an internet connection.

- It accelerates augmented reality (AR) and virtual reality (VR) apps by processing data instantaneously at the point of collection.

- It enables applications to comply with data privacy regulations and security policies by running them on the approved infrastructure and restricting them to data stored within specific geographic regions and transferred within specified networks.
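The last point in the list above, restricting an application to data in specific regions and to specified networks, amounts to a policy check before any processing happens. The sketch below shows one way to express that idea. The `ResidencyPolicy` class, the region codes and the network names are hypothetical placeholders and do not represent any particular regulation or product feature.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ResidencyPolicy:
    allowed_regions: frozenset   # regions where the data may be stored
    allowed_networks: frozenset  # networks over which the data may travel


def may_process(policy: ResidencyPolicy, data_region: str, network: str) -> bool:
    """Allow processing only if both the storage region and the network are approved."""
    return data_region in policy.allowed_regions and network in policy.allowed_networks


# Hypothetical policy for an edge site, for illustration only.
eu_store_policy = ResidencyPolicy(
    allowed_regions=frozenset({"eu-west", "eu-central"}),
    allowed_networks=frozenset({"store-lan", "corp-vpn"}),
)

print(may_process(eu_store_policy, "eu-west", "store-lan"))    # approved region and network
print(may_process(eu_store_policy, "us-east", "store-lan"))    # region not approved
print(may_process(eu_store_policy, "eu-west", "public-wifi"))  # network not approved
```

An edge application could run a check like this locally before touching a record, so that non-compliant data never leaves the site.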

The edge doesn’t replace the cloud in any way. Instead, it adds scalability and portability to applications, workloads and business cases that stretch the cloud’s abilities beyond its inherent strengths.

Both are good depending on the use case, workload and business need, said Greg White, senior director of product solutions marketing at Nutanix.

“Being able to do both without creating separate management, cost structure, and employee knowledge silos is important and valuable,” White said.

He said organizations should strive for an IT infrastructure that is flexible enough to easily leverage data center, cloud and edge resources.

“That way they can adapt without ripping out the plumbing every time there’s a new need,” said White.

Dipti Parmar is a marketing consultant and contributing writer to Nutanix. She writes columns for major tech and business publications such as IDG’s CIO.com, CMO.com, Entrepreneur Mag and Inc. Follow her on Twitter @dipTparmar or connect with her on LinkedIn.

© 2022 Nutanix, Inc. All rights reserved.