It’s common in the IT business to see timeworn concepts frequently recycled and applied to solve modern problems. It’s not a bad thing; in fact, sometimes history can provide the context we need to understand how the latest IT models work.

Take the much-talked-about IT edge, for example. Today’s edge represents a next-generation shift in where data processing occurs. While processing has already been seeping out of traditional data centers and into regional sites and public cloud infrastructure for some time now, edge computing is pushing it out even farther, to many more distributed, remote locations.

The Fragmenting Datacenter

For many organizations, the traditional datacenter is splintering to allow workloads to run in the place best suited for them, based on such variables as performance, cost, security and management. Those processing locations can be the datacenter, a public cloud infrastructure and, increasingly, small satellite data centers that aggregate data processing from nearby devices. Some workloads simply run best directly within the network-connected machines – Internet of Things (IoT) devices and sensors – and those devices might be anywhere in the world.

Welcome to the modern-day “edge.”

The primary goal of edge computing is to reduce latency in situations where near-instantaneous application response can save an organization piles of money, vastly improve processes or decision-making, and even save lives.

IoT and Industrial IoT (IIoT) devices can be located in planes, driverless cars, agricultural fields, underwater robots, oil rigs and a million other places. Many are hard at work collecting data that is most valuable at the location where it’s created, not after it’s been hauled back over a long-distance network to a corporate datacenter or public cloud, then sliced, diced and evaluated. In today’s world, some classes of actionable data age fast.

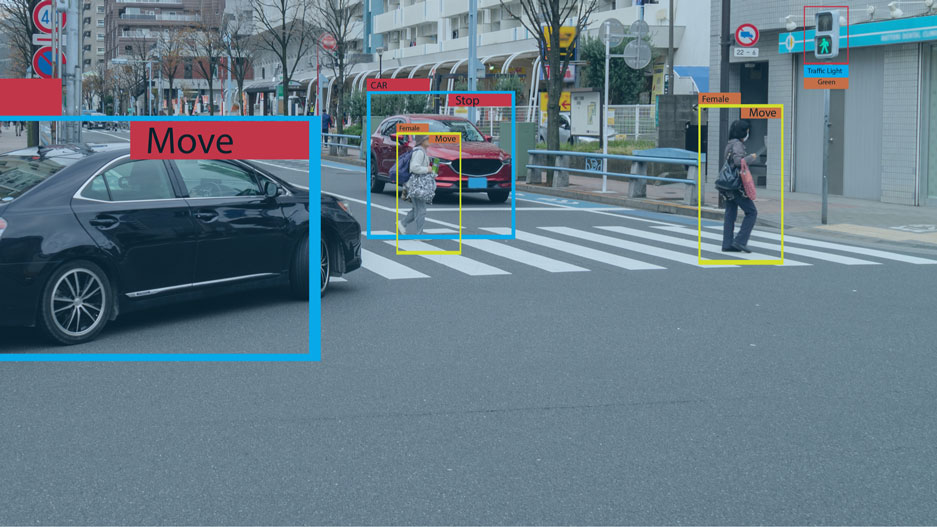

Stale data can have disastrous results. A delay of mere milliseconds can make a difference when a self-driving car needs to detect and avoid a pedestrian, so cars will continue to process the most important data on-board, locally on the device edge.
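To put “milliseconds” in perspective, here is a small sketch that converts a processing delay into the distance a vehicle covers while it waits for an answer. The 100 km/h speed and the 10 ms and 100 ms latencies are illustrative assumptions, not measurements from any real vehicle or network.

```python
def metres_travelled(speed_kmh: float, latency_ms: float) -> float:
    """Distance in metres a vehicle covers during a delay of `latency_ms`."""
    speed_m_per_s = speed_kmh * 1000 / 3600  # convert km/h to m/s
    return speed_m_per_s * latency_ms / 1000  # convert ms to s


if __name__ == "__main__":
    # Hypothetical latencies: local on-board processing vs. a remote round trip.
    scenarios = [("on-board processing", 10), ("remote round trip", 100)]
    for label, latency_ms in scenarios:
        distance = metres_travelled(100, latency_ms)
        print(f"{label} ({latency_ms} ms) at 100 km/h: {distance:.2f} m")
```

At 100 km/h, every additional 100 ms of delay works out to roughly 2.8 m of travel before the car can react.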

Similarly, when a surveillance system with facial recognition tries to identify a fleeing criminal, it may not be desirable to risk a network path that traverses multiple networks into and out of the public cloud.

How the Edge Has Evolved

The edge is an IT concept that’s been around for decades. When looking at the big picture, the edge is like an intelligent device (or collection of intelligent devices) closest to one of the following:

- The external routers in your data center (your edge)

- The transition point between communications networks (the network edge)

- A person using the device to get a computational result or consuming content from it (the device edge)

- An unmanned machine (IoT device) using local compute to derive a computational result (also the device edge)

Between networks – Until recently, the term “edge” was used predominantly in the context of communications networks. In networking parlance, the edge signifies a device, usually a router (along with multiplexers, gateways and other types of gear that may be attached to routers), that links local corporate networks to a wide-area network (WAN) and/or the Internet.

The WAN edge was, and still is, physically one or more such devices in a datacenter, server room or wiring closet, each with a LAN connection on one side and a WAN connection on the other. When mobile networks took off, the network edge expanded to include anywhere there was a user with a smart mobile device and a network connection.

Between users and networks – In my time as CIO at Pandora, we focused on the edge from the perspective of a content provider that ran its own content-delivery network, or CDN. As a music streaming and Internet radio service, we cared greatly about quality of service: no one wants to listen to music that’s choppy or has dead spaces while the stream is buffering. CDNs, like those operated by Akamai, Cloudflare, Fastly, Netflix and others, use a number of tricks to accelerate the delivery of music and other media to Internet-connected devices and avoid this type of performance degradation.

A primary technique they use is edge caching: storing replicas of content on multiple servers placed strategically at the edges of the Internet, close to users. Because physical distance incurs latency, and latency is anathema to real-time content like streaming music, being close to the network edge became an important way for Pandora to shrink the physical distance between users and the source of our content. Those edge caches helped boost performance and, in turn, the all-important customer experience.
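The caching idea can be sketched as a tiny edge server that answers repeat requests from a local replica and goes back to the distant origin only on a miss. This is a minimal illustration, not how Pandora or any named CDN implements caching: the class, the origin callback and the track IDs are hypothetical, and least-recently-used (LRU) eviction is just one common, simple policy chosen for the example.

```python
from collections import OrderedDict
from typing import Callable


class EdgeCache:
    """Toy edge server that keeps replicas of popular content (hypothetical design).

    Uses least-recently-used (LRU) eviction; production CDNs use their own,
    more elaborate policies.
    """

    def __init__(self, capacity: int, fetch_from_origin: Callable[[str], bytes]):
        self.capacity = capacity
        self.fetch_from_origin = fetch_from_origin  # the slow, long-distance path
        self.replicas: "OrderedDict[str, bytes]" = OrderedDict()
        self.hits = 0
        self.misses = 0

    def get(self, key: str) -> bytes:
        if key in self.replicas:
            self.replicas.move_to_end(key)  # mark as most recently used
            self.hits += 1
            return self.replicas[key]
        # Cache miss: fetch from the origin and keep a local replica.
        self.misses += 1
        content = self.fetch_from_origin(key)
        self.replicas[key] = content
        if len(self.replicas) > self.capacity:
            self.replicas.popitem(last=False)  # evict the least recently used item
        return content


if __name__ == "__main__":
    origin_requests = []

    def origin(track_id: str) -> bytes:
        origin_requests.append(track_id)  # each call stands in for a long-haul fetch
        return f"audio-bytes-for-{track_id}".encode()

    cache = EdgeCache(capacity=2, fetch_from_origin=origin)
    for track in ["a", "b", "a", "c", "b"]:
        cache.get(track)
    print(cache.hits, cache.misses, origin_requests)
```

With room for only two replicas, the repeat request for track "a" is served locally, while the other four requests travel back to the origin; a larger cache or a more popular catalog raises the share served from the edge.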

Similarly, traditional communications service provider (CSP) networks have edges. Because these edges are critical to CSPs’ ability to deploy services with solid customer experiences, the Open Networking Foundation runs a project called CORD (Central Office Re-architected as a Datacenter) that uses virtualization and cloud-native technologies to help the CSP edge deliver similarly high-performing end-user experiences.

Reducing latency and boosting performance may be increasingly important in IoT applications. Because IoT applications can affect a person’s health, safety or decision-making in real time, their need for speed parallels what we accomplished with CDNs and what CSPs are now trying to do with their own networks.

Between IoT Apps and Processors

Real-time IoT applications are cropping up in planes, under the ocean, in autonomous vehicles, in smart utility meters, smart buildings, in Web-connected surveillance cameras on light poles…the list goes on.

Edge computing combines with analytics, artificial intelligence (AI) and automation directly in these devices – or in some cases, in mini-datacenters very nearby – to make data actionable almost instantly. Consider, for example, that financial trading systems, augmented and virtual reality, surveillance cameras, healthcare monitors and industrial robots generate vast amounts of data that are the most valuable at the moment they’re created. Edge computing attempts to make it available right at that moment.

Transporting data over large distances for processing, either to a traditional datacenter or a public cloud, incurs delay that real-time applications can’t tolerate. Analytics and AI can be bundled into the local processing so that data becomes actionable almost as soon as it’s generated.
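Distance alone puts a floor under that delay. Light in optical fiber travels at roughly two-thirds of its speed in a vacuum, about 200 km per millisecond, so propagation delay can be estimated before any routing, queuing or processing time is added. The sketch below assumes that rounded figure; the 10 km and 2,000 km distances are hypothetical.

```python
# Approximate speed of light in optical fiber: ~200,000 km/s, i.e. ~200 km per ms.
FIBER_KM_PER_MS = 200.0


def min_round_trip_ms(distance_km: float) -> float:
    """Lower bound on round-trip time from propagation delay alone.

    Ignores routing hops, queuing and processing, which only add to the total.
    """
    return 2 * distance_km / FIBER_KM_PER_MS


if __name__ == "__main__":
    for site, km in [("nearby edge site", 10), ("distant cloud region", 2000)]:
        print(f"{site} ({km} km): at least {min_round_trip_ms(km):.1f} ms round trip")
```

Real fiber routes are rarely straight lines, so actual round trips to a distant region are typically longer than this bound; the nearby site stays well under a millisecond either way.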

There are other, secondary benefits to edge computing in the IoT world. Perhaps most important, local processing filters data, reducing the volumes sent to the cloud or datacenter over a network, helping to ease network bandwidth requirements and cost. It also reduces processing resource requirements and costs in the cloud or datacenter.
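A minimal sketch of that filtering step, assuming an edge node that collapses a window of raw sensor readings into one summary record and forwards individual values only when they cross an alert threshold. The summary fields, the threshold and the sample readings are all hypothetical.

```python
from dataclasses import dataclass
from statistics import mean
from typing import List


@dataclass
class WindowSummary:
    """What the edge node forwards upstream instead of every raw reading."""
    count: int
    minimum: float
    maximum: float
    average: float
    alerts: List[float]  # raw values worth sending in full


def summarize_window(readings: List[float], alert_threshold: float) -> WindowSummary:
    """Collapse one window of sensor readings into a single summary record."""
    if not readings:
        raise ValueError("cannot summarize an empty window")
    return WindowSummary(
        count=len(readings),
        minimum=min(readings),
        maximum=max(readings),
        average=mean(readings),
        alerts=[r for r in readings if r > alert_threshold],
    )


if __name__ == "__main__":
    # Hypothetical temperature readings from one sensor over one window.
    window = [20.0, 21.5, 22.0, 95.0, 21.0]
    summary = summarize_window(window, alert_threshold=80.0)
    print(summary)
```

Five readings become one record here; with windows of thousands of readings, the reduction in upstream traffic grows accordingly, while out-of-range values still reach the cloud or datacenter intact.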

That might seem like a minor consideration if your enterprise is piloting small IoT rollouts. But Statista projects that as many as 75 billion devices could be connected to the IoT by 2025. If those devices rely on public cloud resources for storage and aggregate analyses, the data volume and cost will add up fast.

How Enterprises Are Coping

How are IT teams embracing edge computing, from a management and security standpoint? With pockets of processing everywhere and billions of IoT endpoints opening up portals into organizations' networks and IT infrastructure, this could become a thorny task.

In some cases, such as in factory environments, the IIoT devices that need managing and securing may already be local, so they can be controlled by local systems and personnel according to existing policies and procedures.

Beyond that, enterprises will be choosing where to run edge workloads: with traditional datacenter providers, with new entrants and, if CORD is successful, in new locations within telecom companies.

Containers – From a management perspective, smart enterprises are building their edge management around containers. The approach parallels what happened when workflows became mobile and networks no longer had definable perimeters. Containers take the idea behind virtual machines (VMs), which free application software and operating systems from hardware, a step further: they give code just the minimum it needs to run, which helps keep ports and libraries you don’t need from being exploited. Many options exist, and containers can be architected alongside VMs or hyperconverged infrastructure (HCI).

Orchestration and integration – In addition, the industry is working to make on-premises, edge, and public cloud environments operate similarly enough that managing the distributed environment looks like a simple extension of your datacenter. Part of these efforts involves developing cloud-native tools that empower companies to build and run highly scalable applications in any cloud or edge environment. Eventually, it should be possible to create centralized policies in a standard way across private clouds, public clouds and edge locations, so that every time a new device is introduced, it doesn’t need to be secured separately, which can introduce the potential for error.
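As a rough illustration of a centrally defined policy, the sketch below checks any newly introduced device against one shared baseline, whether it sits in a private cloud, a public cloud or an edge location. It is a hypothetical model only: the policy fields, port numbers, firmware versions and device names are invented for the example, and real orchestration tools express policy in their own formats.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Set, Tuple


@dataclass(frozen=True)
class Policy:
    """A single, centrally defined security baseline (fields are hypothetical)."""
    allowed_ports: FrozenSet[int]
    require_encryption: bool
    min_firmware: Tuple[int, int]


@dataclass
class Device:
    name: str
    location: str  # e.g. "private-cloud", "public-cloud", "edge-site-7"
    open_ports: Set[int]
    encrypted: bool
    firmware: Tuple[int, int]


def violations(device: Device, policy: Policy) -> List[str]:
    """Check a device against the shared policy; its location plays no role."""
    problems = []
    unexpected = device.open_ports - policy.allowed_ports
    if unexpected:
        problems.append(f"unexpected open ports: {sorted(unexpected)}")
    if policy.require_encryption and not device.encrypted:
        problems.append("traffic not encrypted")
    if device.firmware < policy.min_firmware:  # tuples compare element by element
        problems.append("firmware below minimum")
    return problems


if __name__ == "__main__":
    baseline = Policy(allowed_ports=frozenset({443, 8883}), require_encryption=True, min_firmware=(2, 1))
    new_camera = Device("camera-42", "edge-site-7", {443, 23}, True, (2, 0))
    print(violations(new_camera, baseline))
```

Because the same `violations` check runs against the same baseline everywhere, adding a device at a new edge site does not require writing a separate, hand-tuned security configuration for it.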

While all this integration work is not yet complete, significant progress is expected this year. With some hard work and a bit of luck, this orchestration will be available by the time large-scale IoT and edge computing implementations are ready in most enterprises.

This article from Steve Ginsberg, a CIO Technology Analyst at GigaOM, first appeared in Next Magazine, Issue 6.

Ken Kaplan is Editor in Chief for The Forecast by Nutanix. Find him on Twitter @kenekaplan.

© 2019 Nutanix, Inc. All rights reserved.