The enterprise today is an unfathomable mix of public, private, and hybrid multicloud systems, in addition to complex, in-house datacenters that even experienced CTOs struggle to make sense of.

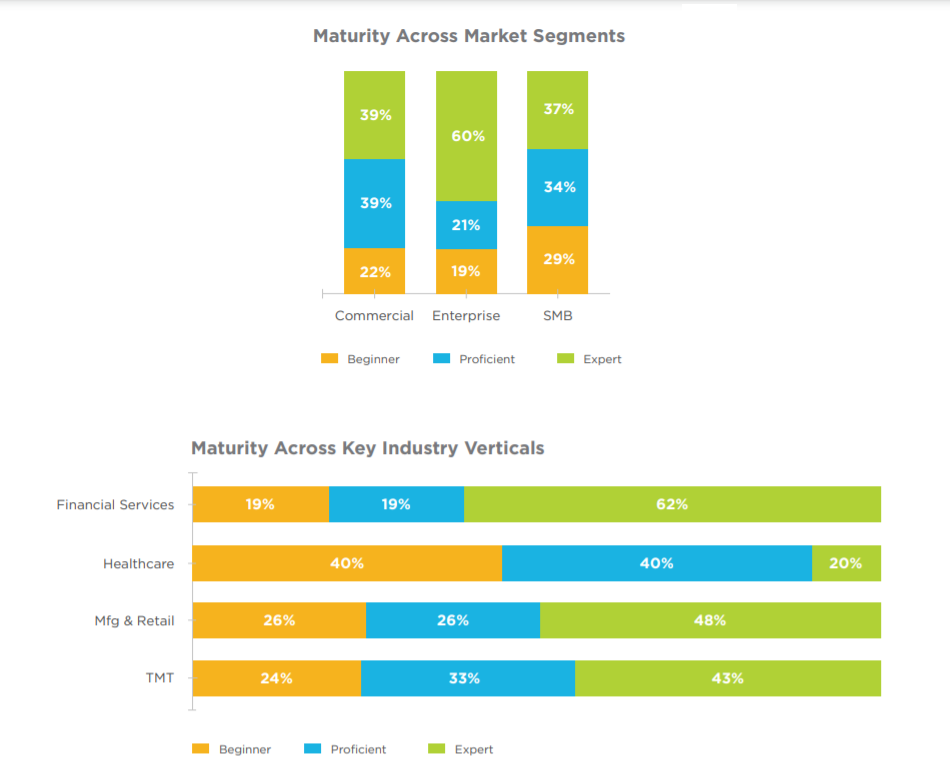

In fact, the Nutanix Cloud Usage Report 2020 found that 80% of organizations – be they in the commercial, SMB, or enterprise category – claim proficiency or expertise in cloud management.

The lack of standardization in both cloud computing and datacenter hardware gives rise to vendor lock-in, which is a situation where an organization is stuck with a particular technology because it is proprietary and the devices and software only work with those provided by the same vendor. A relatable example is the way Apple locked early customers into using the iPod by allowing music purchased via iTunes to be played only on the iPod or iPhone.

While iTunes is a relatively small, individual consideration, there are a lot of factors that go into the decision of choosing or switching to a particular IT infrastructure – from the vendor-management perspective – and each of them warrants closer examination.

The Implications of Vendor Lock-In

Vendor lock-in leads to an unsustainable status quo in which the company is probably losing money and staff aren’t performing to their full potential because they lack optimal resources. Even so, the company hesitates to change the technology in question because the cost of switching to a different provider, or the resulting interruption to business and operations, is too high.

Before cloud computing became commonplace, on-premises equipment and datacenters required huge CAPEX and OPEX investments that were beyond the means of SMBs. Vendors such as IBM, Oracle, HP, and Cisco locked enterprise customers into 5-year or even 10-year hardware and software contracts, promising bigger discounts. However, they used this leverage simply to sell more of their products down the line. Understandably, large organizations harbor a deep-rooted mistrust of, and concern about, predatory vendor behaviour.

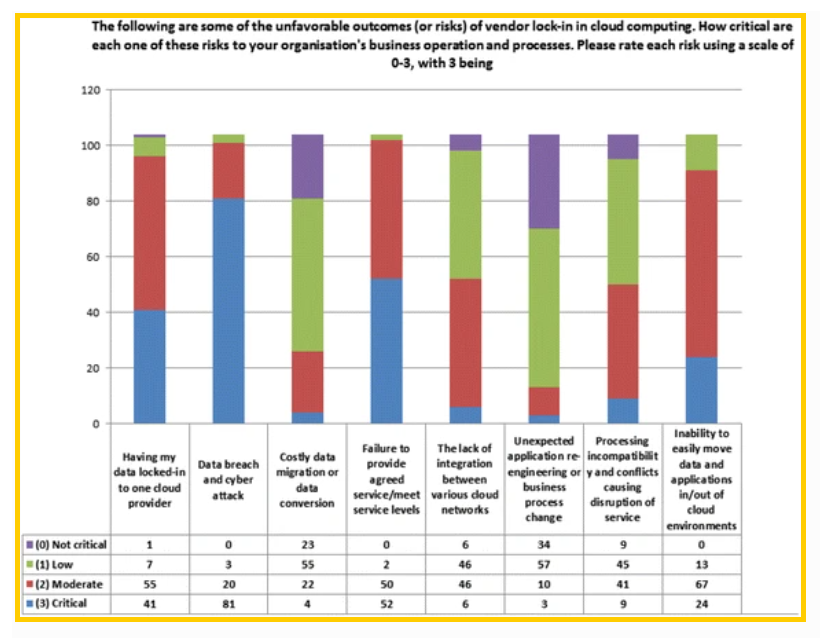

A study by the Journal of Cloud Computing in the UK found a high level of caution in businesses considering the risks of vendor lock-in against the decision to migrate/adopt cloud services, with data lock-in and security breaches topping the list of concerns.

Growing organizations and startups are vulnerable to vendor lock-in; they might have opted for a one-size-fits-all public cloud solution in their early days and since outgrown it. By then, however, they might have come to over-rely on the services or infrastructure provided by that vendor, and the cost of switching might be too high for them.

How to Avoid Vendor Lock-In

A few simple but effective steps help ensure that a cloud migration or a datacenter upgrade doesn’t introduce risks, leave vulnerabilities open, or limit the organization’s capabilities in significant ways.

Understand the infrastructure (and the options). Due diligence is the first, non-negotiable step that every organization looking to avoid a bad case of vendor lock-in must undertake. A thorough audit of the on-premises hardware and cloud software in use is a good way to start.

Then, move on to an understanding of the business and operational requirements. What is the goal behind migrating to this particular cloud or upgrading to that hardware? What compute, storage, or networking resources are needed? What processes are expected to change and how? Which would be better – a public, private, or hybrid cloud environment? What components would each have? What workloads will continue to be limited by legacy technologies and infrastructure?

An in-depth understanding of the organization’s capabilities, workloads, and legal and regulatory limitations is essential in choosing a vendor who’d be a good fit for a particular solution.

CTOs and architects also need to know the ins-and-outs of the cloud market, datacenter solutions, convergence and hyperconvergence, the business models of various vendors, their track record and reputation, as well as the future of cloud services and trends in data management.

Read the fine print. The simplest way to avoid vendor lock-in is to choose vendors wisely at the outset. Proactively review the vendor’s policies and note all the tools, features, APIs, and management interfaces that they provide. Know the industry standards their solutions support – such as the Cloud Data Management Interface (CDMI) – as well as the ones they plan to support in the future.

Further, moving data from one provider to another brings additional complexities and might involve regulations. Data that is formatted for storage in a certain provider’s solution might not be compatible with the new vendor’s ecosystem, and so might need to be modified or transferred in accordance with those regulations.

Negotiate entry and exit strategies. Organizations can take advantage of vendors’ propensity to offer free deployment and extra support or warranty for an extended duration. These days, it is easier to switch clouds and even on-premises infrastructure with a lift-and-shift strategy. However, such incentives shouldn’t sway the decision, which should be based strictly on capability improvement and cost optimization.

Once vendors get wind that a customer is considering switching to another provider, they might turn uncooperative or even hostile. To avoid this, it is essential to include a clause for deconversion assistance in the SLA at the outset. This clarifies responsibilities and actions on both sides – company and vendor.

Don’t hesitate to ask for the vendor’s exit terms on data and application migration as well as legal and financial closure, and examine them carefully. IT teams would do well to estimate the cost of migration to another vendor in terms of both time and money, and to put a migration strategy in place.

Finally, there should be a designated plan of action to follow when cloud and datacenter management contracts are coming to an end. Watch out for auto-renewals in the contracts and get them disabled. Also build secondary business relationships with competing vendors or MSPs so that there’s always backup available.
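As a rough illustration of watching for auto-renewals, the sketch below flags contracts whose cancellation-notice deadline is approaching. The `Contract` fields, the vendor names, and the 30-day lead time are all hypothetical; real contract terms would come from the organization's own records.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class Contract:
    # Hypothetical record; field names are assumptions for illustration.
    vendor: str
    end_date: date
    auto_renew: bool
    notice_days: int  # notice period required to cancel or renegotiate


def contracts_needing_action(contracts, today, lead_days=30):
    """Return (vendor, notice_deadline) pairs for auto-renewing contracts
    whose notice deadline is within lead_days of today or already passed."""
    flagged = []
    for c in contracts:
        notice_deadline = c.end_date - timedelta(days=c.notice_days)
        if c.auto_renew and today >= notice_deadline - timedelta(days=lead_days):
            flagged.append((c.vendor, notice_deadline))
    # Most urgent deadline first.
    return sorted(flagged, key=lambda item: item[1])
```

Running a check like this on a schedule gives the team time to disable the auto-renewal or open talks with a secondary vendor before the notice window closes.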

Choose open source software where sufficient. Many proprietary software vendors have predatory pricing and licensing models, which are subject to change at their whims. When the solution combines hardware and software, the problem is exacerbated. Free open-source OS and middleware are good enough for many scenarios, although it is an “open” question whether the top-layer applications will suit the architecture and workloads.

For this to happen, the company’s IT workloads must also support non-proprietary alternatives. If these are themselves locked into the features, configurations, and APIs of proprietary technologies, vendor lock-in is inevitable. Failing to support open standards frequently results in heavy customizations down the line.

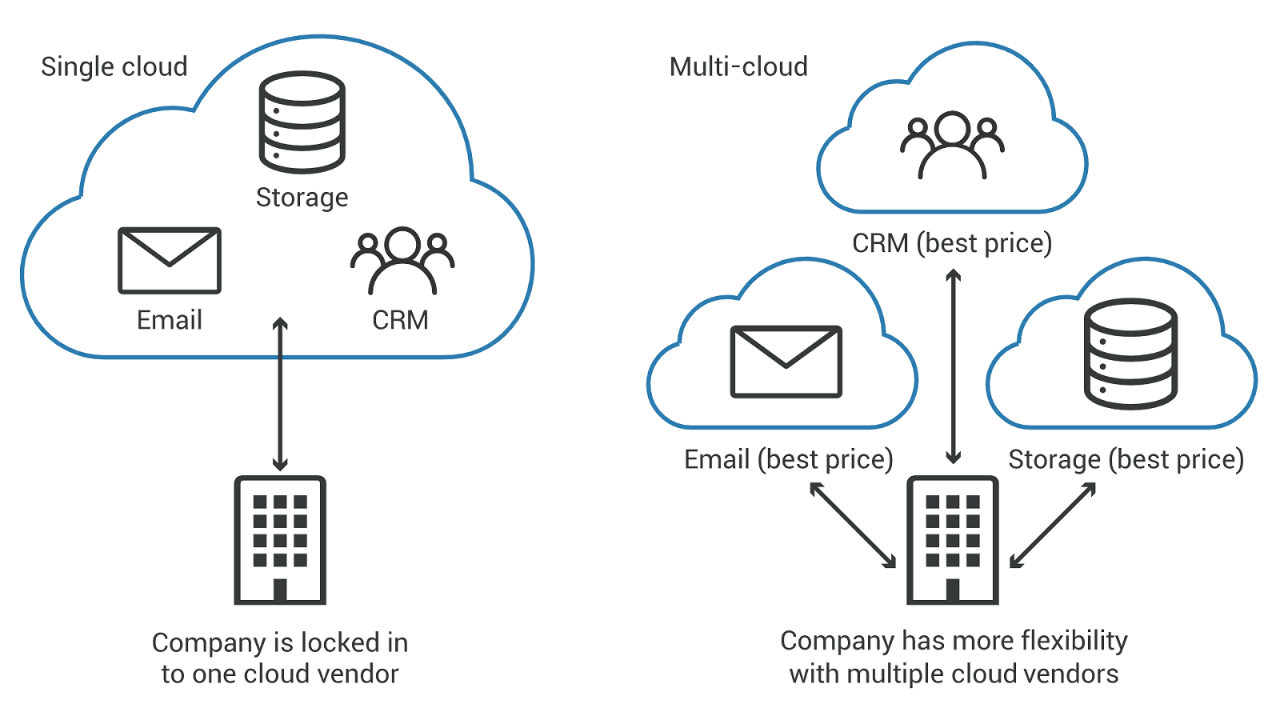

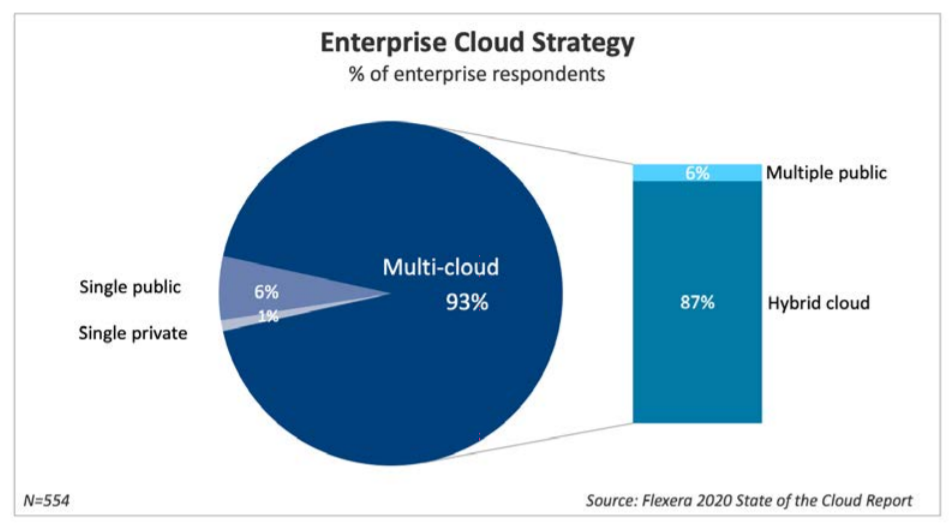

Build a hybrid, multicloud environment. With the availability of reliable public cloud providers and MSPs today, most companies already have a multicloud environment.

An integrated hybrid and multicloud environment reduces dependence on a single vendor, especially as workloads are distributed independently of any single infrastructure. A multicloud architecture forces the organization to proactively implement cost savings processes, and use resources and applications that avoid vendor lock-in.

Again, the hybrid cloud builds in flexibility when it comes to selecting applications and services. In a public cloud environment, there is a possibility the provider might experience downtime or a security breach, or simply change their policies and offerings to reflect the market, leaving customers with little option but to go along.

In a hybrid cloud environment, critical data remains under the organization’s control at all times. Data can be stored locally or on-premises – either in physical or virtualized form – and only moved to the public cloud when it needs to be used by a particular application, denying the cloud provider or app developer exclusive control.

Maximize portability of data and applications. The ability of data to move from one environment to another is paramount in cloud as well as datacenter environments. Vendors need to define their data models in such a way as to be usable across platforms.

Open source standards come in handy here. CDMI sets guidelines for creation, retrieval, updating, and deletion of data elements from the cloud, while the Open Data Element Framework defines the categorization, indexing, and documentation of data in any IT infrastructure. While not all vendors choose to apply these standards, organizations can seek out those that do.

IT teams should insist that vendors describe their data models as clearly as possible and use the appropriate schema and metadata to make sure everything is computer- as well as human-readable.
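To make the idea of a self-describing data model concrete, here is a minimal sketch that wraps exported records in an envelope carrying a schema and metadata alongside the data. The envelope layout and the `export_with_schema` helper are assumptions for illustration only; they are not part of CDMI or any vendor's format.

```python
import json


def export_with_schema(records, field_types, source):
    """Serialize records as JSON together with a schema and metadata,
    so the export can be read without the originating system."""
    # Refuse to export records that do not match the declared schema.
    for i, rec in enumerate(records):
        missing = set(field_types) - set(rec)
        if missing:
            raise ValueError(f"record {i} is missing fields: {sorted(missing)}")
    envelope = {
        "metadata": {"source": source, "record_count": len(records)},
        "schema": field_types,  # field name -> human-readable type label
        "data": records,
    }
    # Indented, key-sorted JSON stays readable for people and parsers alike.
    return json.dumps(envelope, indent=2, sort_keys=True)
```

An export in this shape can be inspected by a person and parsed by any platform with a JSON library, which is the kind of portability worth asking vendors about.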

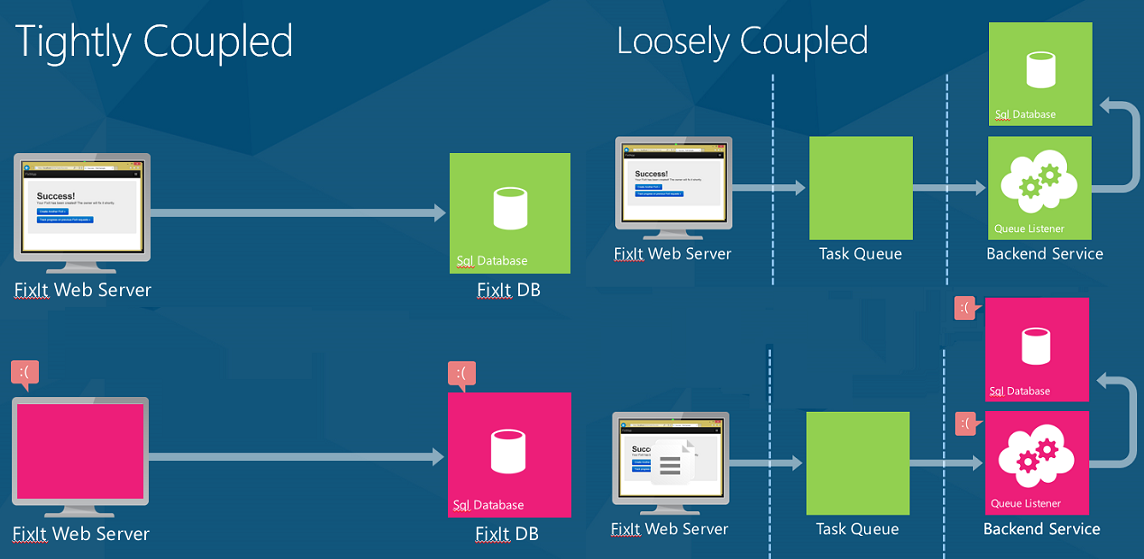

Applications operating on IaaS and PaaS deployments need to be easily decoupled from the underlying infrastructure or cloud platforms. Cloud application components and the application components that interact with them can be “loosely linked” by incorporating REST APIs with popular industry standards like HTTP, JSON, and OAuth to abstract them from the underlying proprietary infrastructure.
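One common way to decouple application code from a provider is to program against a small interface and keep provider-specific details behind it. The sketch below is an assumption-laden illustration: `ObjectStore`, `InMemoryStore`, `LocalDirStore`, and `archive_report` are hypothetical names, and a real deployment would add an implementation that wraps the chosen vendor's SDK or REST API.

```python
from pathlib import Path
from typing import Protocol


class ObjectStore(Protocol):
    """Minimal storage interface the application depends on."""

    def put(self, key: str, data: bytes) -> None: ...
    def get(self, key: str) -> bytes: ...


class InMemoryStore:
    """Stand-in backend that keeps objects in a dictionary."""

    def __init__(self) -> None:
        self._objects: dict[str, bytes] = {}

    def put(self, key: str, data: bytes) -> None:
        self._objects[key] = data

    def get(self, key: str) -> bytes:
        return self._objects[key]


class LocalDirStore:
    """Stand-in backend that writes objects to a local directory."""

    def __init__(self, root: Path) -> None:
        self._root = root
        self._root.mkdir(parents=True, exist_ok=True)

    def put(self, key: str, data: bytes) -> None:
        (self._root / key).write_bytes(data)

    def get(self, key: str) -> bytes:
        return (self._root / key).read_bytes()


def archive_report(store: ObjectStore, report_id: str, body: str) -> str:
    """Application logic: knows only the interface, not the backend."""
    key = f"report-{report_id}.txt"
    store.put(key, body.encode("utf-8"))
    return key
```

Because `archive_report` never names a backend, swapping providers means writing one new class rather than touching application logic.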

Finally, the Cloud Service Provider (CSP) or the vendor that manages the datacenter should define a way to extract data quickly, easily, and economically. Data lock-in is a huge risk to mitigate; these steps simplify and enable immediate data transfer to alternate platforms when necessary.

Stick to SDLC best practices. DevOps is the high point of present-day software development life cycles. It focuses on platform-independence in both cloud and on-premises deployments. For example, Infrastructure as Code (IaC) is a common DevOps practice that aims for technology-independent design with guidelines for provisioning, managing, and operating IT infrastructure.

DevOps helps maximize code portability: it isolates software from its existing environment and abstracts dependencies away from the solution provider, so that applications can be deployed to diverse environments.
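The spirit of technology-independent infrastructure definitions can be sketched as a provider-neutral specification that is translated into provider-specific settings only at the last step. Everything here is hypothetical: `provider_a`, `provider_b`, and their size labels are invented for illustration and do not correspond to any real cloud catalogue or IaC tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ServerSpec:
    """Provider-neutral description of what the workload needs."""
    name: str
    cpus: int
    memory_gb: int


# Hypothetical catalogues: (size label, cpus, memory_gb), smallest first.
SIZE_CATALOGUE = {
    "provider_a": [("a.small", 2, 4), ("a.large", 8, 32)],
    "provider_b": [("b-std-2", 2, 8), ("b-std-8", 8, 32)],
}


def render(spec: ServerSpec, provider: str) -> dict:
    """Map the neutral spec to the smallest size that satisfies it."""
    for label, cpus, memory_gb in SIZE_CATALOGUE[provider]:
        if cpus >= spec.cpus and memory_gb >= spec.memory_gb:
            return {"provider": provider, "name": spec.name, "size": label}
    raise ValueError(f"{provider} has no size that fits {spec}")
```

The same `ServerSpec` can then be rendered for either provider, so the requirement stays in version control while the provider mapping remains a replaceable detail.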

Is Vendor Lock-In Always Bad?

While no organization wants to be in a monogamous vendor marriage without reason, there is such a thing as a win-win relationship with vendors. Today, the typical organization wants to use standardized or even commodity software, but create sophisticated aggregations and “stacks” of software components, and build a bespoke solution that enables them to deliver a differentiated offering to their customers.

IT organizations tend to think that everyday operational processes like managing data, installing additional software components, applying patches and upgrades on time, and scaling functionality while ensuring availability and performance are within their capabilities. This couldn’t be further from the truth. There is too much data, infrastructure, and human resources risk involved, and these problems rear their ugly heads every day – application loads exceed system capacity, new APIs require newer data formats, lack of redundancy causes transaction failures, and so on.

Therefore, the choice of technology vendors becomes a multifaceted decision involving features, cost, scalability, support, and future viability of the solution. This is better understood by traditional datacenter managers than by cloud architects.

“I don’t think datacenter managers necessarily ‘hate’ vendor lock-in. In the absence of an open market they will select the solution that best meets their needs. The possibility of vendor lock-in can be seen as a necessary price to pay to get what you want in the near term,” said John Sloan, analyst for the Info-Tech Research Group.

IT needs to focus on developing and deploying next-generation applications instead of upgrading the infrastructure or migrating to a better cloud. While there are many commonalities between different CSPs, especially in the public cloud ecosystem (such as VMs, block storage, and Hadoop services), the underlying architecture that runs critical workloads differs drastically from one provider to another.

The added complexity of multicloud environments as well as open source software components doesn’t always justify the singular purpose of avoiding vendor lock-in. Many cloud vendors have unique and powerful capabilities that enhance the organization’s ability to deliver better services to their customers. Thus, IT needs to change its antiquated view that vendor lock-in is an evil that needs to be avoided at all costs. It is only one factor to assess when evaluating technology and operational strategy.

A very strong reason to work with a single cloud service provider is that it’s usually far easier to migrate workloads from one public cloud to another than to migrate to the cloud from a datacenter or to upgrade on-premises infrastructure. Moreover, using a single platform for all compatible IT needs allows the organization to get a better deal and gain more leverage in deployment, support, upgrades, and renewals.

No Silver Bullet

There is no magic pill (or even the need for one) to avoid vendor lock-in. Many companies keep shunting between private and public clouds and on-premises infrastructures, only to realize that proprietary technologies offer better performance, tighter security, cost savings, or just simpler operations than a vendor-independent solution. An organization using AWS, for instance, cannot simply wake up one morning and switch to Google Cloud.

However, with some straightforward due diligence, it is quite possible to reach a comfort level in operational flexibility as well as risk mitigation by making sure there’s a fallback vendor available in case of a falling-out with the current one.

Dipti Parmar is a marketing consultant and contributing writer to Nutanix. She writes columns on major tech and business publications such as IDG’s CIO.com, Adobe’s CMO.com, Entrepreneur Mag, and Inc. Follow her on Twitter @dipTparmar and connect with her on LinkedIn.