Cloud computing keeps the wheels of business turning in today’s technology-based, mobility-dependent economy. In fact, Gartner has named “cloud ubiquity” as one of the trends that are shaping the future of cloud computing.

No wonder global spending on cloud services – including software, hardware and managed services – is projected to surpass $1.3 trillion by 2025, according to an IDC forecast.

“In today's digital-first world, business outcomes and innovation are increasingly tied to the ability to develop and use innovative technologies and services anywhere, as quickly as possible. Cloud is the foundation for meeting this need,” said Rick Villars, Group VP, Worldwide Research at IDC.

At the user-facing level, these “business outcomes” are driven by cloud applications performing optimally. Traditionally, in on-premises environments, application performance depended on resource allocation and optimization. The common questions surrounding application performance were:

- Is the code well-written?

- Is the database optimized?

- Can the application handle high usage loads?

However, cloud and cloud-native applications are altogether different beasts: the infrastructure and architecture considerations of the cloud take these questions to a new level of complexity. Optimizing cloud app performance requires developers and architects to take a multidimensional approach and closely match cloud resource configurations to the workload in question.

Here’s a look at the steps that IT teams can take to identify and rectify typical issues that plague app performance in the cloud.

Clarify Business Requirements

It always begins with business goals. When organizations are clear about what they want the workload or the application to do, it becomes easier to assess whether the cloud model they’re considering can match performance requirements and standards of availability, reliability, scalability, number of concurrent users, response times, and so on.

Automation, digital transformation, and remote and always-on access to shared data and infrastructure are some of the goals that many organizations are currently working towards in light of recent disruptions.

“The high pace of growth in PaaS, IaaS, and SISaaS, which account for about half of the public cloud services market, reflects the demand for solutions that accelerate and automate the development and delivery of modern applications,” said Lara Greden, Research Director, PaaS at IDC.

“As organizations adopt DevOps approaches and align according to value streams, we are seeing PaaS, IaaS, and SISaaS solutions grow in the range of services and thus value they provide,” she added.

Have a Plan for Deployment

Organizations need to determine if the app they’re considering is truly ready for the cloud. The microservice-based architecture of cloud-native apps allows for continuous deployment and on-demand scaling without the need for oversubscribing capacity. However, apps with a large number of dependencies or cross-app interactions that aren’t localized within the cloud environment are likely to face latency issues.
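
To see why non-localized dependencies hurt, consider the arithmetic of a chatty transaction, for example an app moved to the cloud while its database stays on-prem. The sketch below is a deliberately simplified model that assumes each data call is made sequentially and costs exactly one network round trip; the function name and all figures are hypothetical, chosen only to contrast a co-located database with one reached across a WAN link.

```python
def transaction_latency_ms(compute_ms: float, sequential_calls: int, round_trip_ms: float) -> float:
    """Simplified model: app compute time plus one network round trip per sequential data call."""
    return compute_ms + sequential_calls * round_trip_ms


if __name__ == "__main__":
    # Hypothetical figures: 40 sequential queries per transaction.
    colocated = transaction_latency_ms(50.0, 40, 0.5)   # database in the same cloud region
    remote = transaction_latency_ms(50.0, 40, 25.0)     # database reached across a WAN link
    print(colocated, remote)  # 70.0 1050.0
```

Under these assumed figures the same code runs 15 times slower purely because of where the data lives; batching calls or moving the data alongside the app attacks the multiplier directly.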

Deployment teams also need to know exactly what compute, storage and networking resources the application will need. They might consider setting up a small pilot or test project to assess the performance of the workload as well as business outcomes.
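
A pilot is most useful when its results are checked against the business requirements defined up front. The following sketch assumes three hypothetical targets (p95 response time, availability and supported concurrent users); the `Requirements`, `PilotResult` and `unmet_requirements` names are illustrative and not part of any tool.

```python
from dataclasses import dataclass


@dataclass
class Requirements:
    """Hypothetical targets derived from business goals (illustrative values only)."""
    max_p95_response_ms: float
    min_availability: float      # fraction of successful health checks, e.g. 0.999
    min_concurrent_users: int


@dataclass
class PilotResult:
    """Summary numbers measured during a small pilot deployment."""
    p95_response_ms: float
    availability: float
    peak_concurrent_users: int   # highest concurrency the pilot handled


def unmet_requirements(req: Requirements, pilot: PilotResult) -> list[str]:
    """Return the names of requirements the pilot failed to meet (empty if all pass)."""
    failures = []
    if pilot.p95_response_ms > req.max_p95_response_ms:
        failures.append("response time")
    if pilot.availability < req.min_availability:
        failures.append("availability")
    if pilot.peak_concurrent_users < req.min_concurrent_users:
        failures.append("concurrent users")
    return failures


if __name__ == "__main__":
    req = Requirements(max_p95_response_ms=300, min_availability=0.999, min_concurrent_users=500)
    pilot = PilotResult(p95_response_ms=420, availability=0.9995, peak_concurrent_users=650)
    print(unmet_requirements(req, pilot))  # ['response time']
```

A non-empty result signals that the workload, the chosen cloud model or the resource plan needs another look before a full deployment.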

“In the coming years, enterprises’ ability to govern a growing portfolio of cloud services will be the foundation for introducing greater automation into business and IT processes while also becoming more digitally resilient,” said Villars.

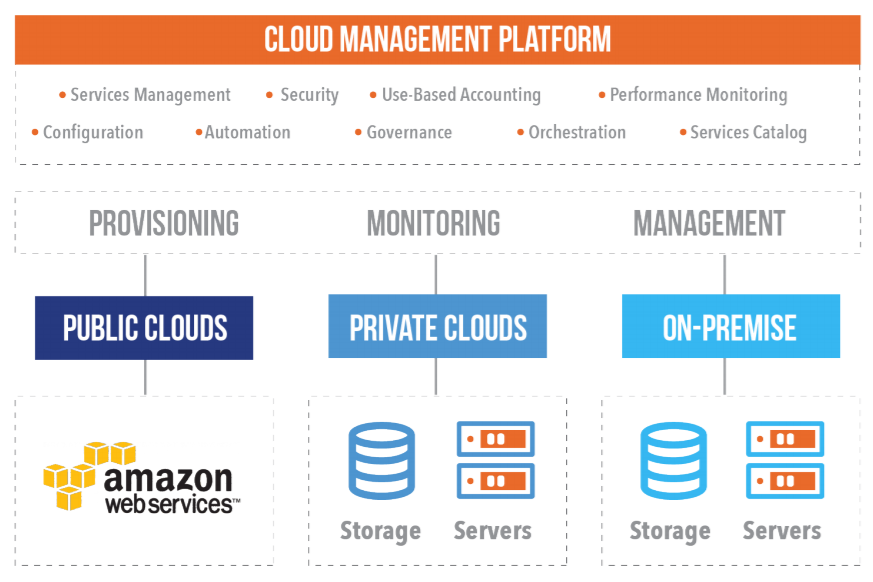

Consider the Nuances of Different Clouds

Traditionally, IT teams have aimed to optimize application performance while maximizing hardware resource utilization across public, private and hybrid clouds. These two goals often conflict, in virtualized on-prem environments and in the cloud alike.

In private cloud environments, the team that runs IT operations also optimizes the application. So, once performance goals are met, the focus turns to maximizing utilization and reducing overall resource consumption.

In the public cloud, however, resources aren’t a concern. More precisely, the underlying hardware is opaque and inaccessible. Therefore, the organization is left with only two goals: meeting response times and ensuring scalability.

Finally, applications operating across multiple clouds in a hybrid cloud environment require a single, unified view for proactive management. Admins need complete visibility across private and public clouds as well as on-prem data centers to ensure business processes keep running smoothly and user experience remains optimal.

Set Benchmarks and Monitor Application Performance

“Visibility is power,” they say.

Okay, they probably say that about knowledge, but given the myriad ways in which application performance might be affected, visibility into all layers of the application stack is key to effective troubleshooting and optimization, especially in a multicloud or hybrid cloud environment.

This is where application performance management (APM) comes in. If admins want to optimize or resolve bottlenecks in cloud app performance, they need to identify and track critical metrics governing functionality and usability. These metrics could be related to process-specific transactions, availability, analytics, or logging. Typical examples include:

- Traffic levels: How many users concurrently access the app on average? Is it scalable enough to handle spikes? To what levels and for how long?

- Application errors: How many errors occur? How frequently? What triggers them?

- Resource availability: Are all instances running? Are there any hanging database requests?

- Response times: If response time is slow, is it due to network or bandwidth issues or suboptimal application code?

- User satisfaction: What is the success rate of the business goal or task that the application enables?
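
Most APM tools compute metrics like these automatically, but a minimal sketch makes the definitions concrete. It assumes a hypothetical `Request` record exported from logs, counts only HTTP 5xx responses as application errors, and uses the nearest-rank method for the 95th-percentile response time; none of this mirrors a specific APM product.

```python
import math
from dataclasses import dataclass


@dataclass
class Request:
    """One hypothetical request record, e.g. parsed from access logs or an APM export."""
    duration_ms: float
    status: int            # HTTP status code
    task_completed: bool   # did the user finish the business task this request belongs to?


def percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile: smallest value with at least pct% of samples at or below it."""
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def summarize(requests: list[Request]) -> dict[str, float]:
    """Compute error rate, p95 response time and task success rate from raw records."""
    n = len(requests)
    errors = sum(1 for r in requests if r.status >= 500)  # assumption: only 5xx count as errors
    return {
        "error_rate": errors / n,
        "p95_response_ms": percentile([r.duration_ms for r in requests], 95),
        "task_success_rate": sum(r.task_completed for r in requests) / n,
    }
```

Percentiles are used here instead of averages because a handful of very slow requests can hide behind a healthy-looking mean.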

“The art is correlating all of those metrics, identifying opportunities to improve performance and then work to address those opportunities,” said Owen Garrett, Head of Products and Community at Deepfence.

A variety of cloud APM tools are available that collect these metrics, store them, and present them in historical or real-time views to help correlate information and insights. Unlike traditional APM tools, cloud APM software need not be installed and managed on-prem within the organization’s network or data center; admins can simply use the APM provider’s cloud instance to configure and monitor cloud apps.

For example, native cloud APMs such as Amazon CloudWatch and Google Cloud’s operations suite give IT managers, developers and DevOps engineers insight into how their applications are performing on AWS or Google Cloud infrastructure, respectively. These tools offer deeper traceability and better compatibility with the provider’s ecosystem. However, they might lack visibility into some critical metrics and offer limited integration with other platforms.

Third-party and standalone APM tools offer advanced reporting features, better visualization and closer integration with apps on other platforms. They are typically delivered via a SaaS model or as managed services.

Cloud APM software typically deals with more dependencies than its traditional counterpart, as different cloud services need to be monitored in different ways. For example, cloud apps running in virtualized instances produce much more log data than serverless functions.

APM software has become a necessity as more enterprises move workloads to the cloud and run operations and automate processes across distributed computing environments. However, in the cloud model, issues aren’t resolved from within the APM software itself. Remediation involves tweaking (or even temporarily turning off) cloud services, as well as reconfiguring on-prem infrastructure in the case of private cloud.

Optimize the Cloud Infrastructure

In cloud environments, application performance depends on the underlying cloud architecture, model, and infrastructure just as much as it does on the software stack. Correlating infrastructure resources with the apps they support and tracking KPIs are prerequisites for both improving and predicting app performance in the cloud.

As more companies move business-critical applications to the cloud, it is imperative to understand the nitty-gritty of cloud performance management (CPM), the tools and techniques needed to improve it, and the tradeoffs involved in different scenarios.

For instance, David Linthicum, Chief Cloud Strategy Officer at Deloitte Consulting, told the story of a small Midwestern bank that migrated business-critical applications to cloud-based platforms in the hope of reducing costs and improving performance. To the IT team’s dismay, the apps as well as the bank’s website ran much slower, and employees and customers alike complained that transactions took longer to complete than before.

They later realized that their decision to keep the data on-prem, away from the cloud-hosted apps that depended on it, wasn’t a good call.

The lesson here is that the entire IT team needs a deep, conceptual understanding of cloud architecture, from both the logical and the practical perspectives. Further, monitoring itself (the tools and agents involved, plus real-time platform analytics) consumes considerable CPU and I/O resources, the two building blocks of application performance.

There is no single cloud architecture that’s best suited for peak application performance. However, some CPM tweaks and best practices can boost application performance significantly.

Select the Right Instances

Cloud vendors offer multiple instance types suited to different kinds of workloads. VMs are still the most common type of cloud instance, but containers are catching up. Application architects need to match the demands of the workload – be it CPU, GPU, memory or storage – to the instance offered by the cloud service provider.

The goal is to right-size the instance with optimal allocation of hardware resources. If the instance is too big, there is a risk of overprovisioning and bloated costs. If it is too small, the workload might not meet ideal performance thresholds.
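
Right-sizing can be framed as picking the cheapest instance that still covers observed peak demand plus some headroom. The catalog below is entirely hypothetical (names, sizes and prices are made up), and the 20% default headroom is an assumption; real catalogs add dimensions such as GPUs, storage throughput and network bandwidth.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class InstanceType:
    name: str
    vcpus: int
    memory_gib: float
    hourly_cost: float


# Hypothetical catalog; real names, sizes and prices vary by provider and region.
CATALOG = [
    InstanceType("small", 2, 4, 0.05),
    InstanceType("medium", 4, 16, 0.20),
    InstanceType("large", 8, 32, 0.40),
    InstanceType("xlarge", 16, 64, 0.80),
]


def right_size(peak_vcpus: float, peak_memory_gib: float, headroom: float = 0.2,
               catalog: list[InstanceType] = CATALOG) -> Optional[InstanceType]:
    """Cheapest instance covering observed peak demand plus a safety headroom, or None."""
    need_cpu = peak_vcpus * (1 + headroom)
    need_mem = peak_memory_gib * (1 + headroom)
    fits = [t for t in catalog if t.vcpus >= need_cpu and t.memory_gib >= need_mem]
    return min(fits, key=lambda t: t.hourly_cost) if fits else None
```

A `None` result means no single instance fits, which is often a hint to scale out across several smaller instances rather than up.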

Use a Load Balancer

A load balancer routes traffic to the best-performing instances. It also tracks metrics that help identify instances that aren’t performing well. The combination of these two benefits improves the underlying performance and scalability of the application.

“It is the core of the technology that allows you to scale out horizontally,” said Garrett.

“You can add more web servers or app servers; you can move users from one generation of an application to another seamlessly. You can do all of that without changing the public face of your application.”
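
One common routing policy is to send each request to the healthy instance with the lowest recent response time. This sketch assumes an exponentially weighted moving average (EWMA) of response times and a simple healthy/unhealthy flag; the class and method names are hypothetical, and production load balancers offer other policies as well, such as round robin or least connections.

```python
from dataclasses import dataclass


@dataclass
class Backend:
    name: str
    ewma_ms: float = 0.0   # smoothed response time in milliseconds
    samples: int = 0       # number of responses recorded so far
    healthy: bool = True


class LeastResponseTimeBalancer:
    """Routes each request to the healthy backend with the lowest smoothed response time."""

    def __init__(self, names: list[str], alpha: float = 0.3) -> None:
        self.backends = {name: Backend(name) for name in names}
        self.alpha = alpha  # weight given to the newest sample in the moving average

    def pick(self) -> str:
        healthy = [b for b in self.backends.values() if b.healthy]
        if not healthy:
            raise RuntimeError("no healthy backends available")
        # Backends with no samples yet sort first (False < True), so each gets tried once.
        return min(healthy, key=lambda b: (b.samples > 0, b.ewma_ms)).name

    def record(self, name: str, response_ms: float) -> None:
        b = self.backends[name]
        if b.samples == 0:
            b.ewma_ms = response_ms
        else:
            b.ewma_ms = self.alpha * response_ms + (1 - self.alpha) * b.ewma_ms
        b.samples += 1

    def mark_unhealthy(self, name: str) -> None:
        # In practice a failed health check or a high error rate would trigger this.
        self.backends[name].healthy = False
```

The moving average lets the balancer react to an instance that is slowing down without overreacting to a single slow response.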

Enable Elastic Autoscaling

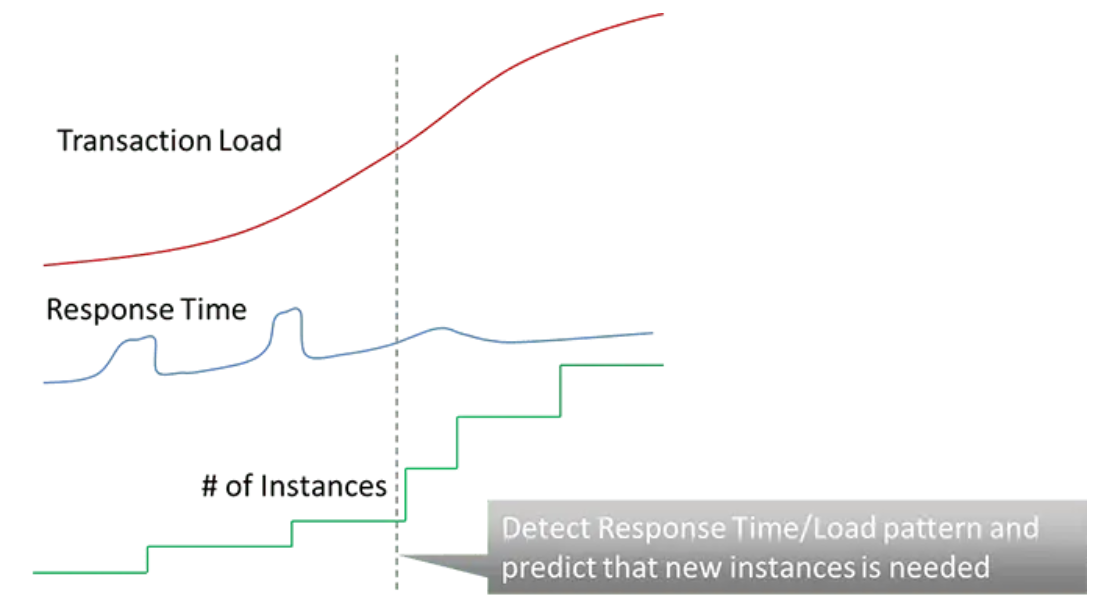

Traditionally, businesses were limited by access to IT resources, so there was little scope for applications to scale dynamically or autonomously. Cloud environments, by their very nature, enable massive horizontal scaling by providing the ability to add or remove instances on demand.

Developers and admins need to agree upon appropriate rule sets for scaling. The application or APM software can track metrics such as CPU utilization; when the load exceeds a predefined utilization threshold, the autoscaling service is triggered. Conversely, when the load drops below the minimum threshold, the process is reversed and unnecessary resources are withdrawn.
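
The threshold rule set described above can be sketched as a pure function that returns the desired instance count. The 70%/30% CPU thresholds and the minimum of 2 and maximum of 10 instances are assumptions for illustration; managed autoscaling services define their own policy types, and this is not any provider's API.

```python
def desired_instances(current: int, avg_cpu_pct: float,
                      scale_out_above: float = 70.0, scale_in_below: float = 30.0,
                      min_instances: int = 2, max_instances: int = 10) -> int:
    """Threshold rule: add an instance above the upper bound, remove one below the lower bound."""
    if avg_cpu_pct > scale_out_above:
        target = current + 1
    elif avg_cpu_pct < scale_in_below:
        target = current - 1
    else:
        target = current  # inside the band: no change
    # Never go below the floor (availability) or above the ceiling (cost control).
    return max(min_instances, min(max_instances, target))
```

Leaving a gap between the two thresholds keeps the group from flapping, that is, adding and removing instances on every small fluctuation in load.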

Autoscaling works best when it is elastic. Cloud-deployed applications and APM software need real-time knowledge of the load and response times on any given tier. If the response time is negatively impacted by the increase in load, it’s time to scale out that tier. A baselining approach also enables predictive autoscaling based on historical load patterns.
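
Elastic and predictive scaling can be combined: provision ahead of time for the load that the historical baseline predicts, and add capacity reactively when response times already miss their target. In this sketch the hour-of-day baseline, the per-instance capacity figure and the function names are all hypothetical simplifications.

```python
import math
from collections import defaultdict
from statistics import mean


def hourly_baseline(history: list[tuple[int, float]]) -> dict[int, float]:
    """Average load (e.g. requests/sec) per hour of day from (hour, load) samples."""
    by_hour: dict[int, list[float]] = defaultdict(list)
    for hour, load in history:
        by_hour[hour].append(load)
    return {hour: mean(loads) for hour, loads in by_hour.items()}


def plan_capacity(next_hour: int, baseline: dict[int, float], per_instance_rps: float,
                  current: int, p95_ms: float, target_p95_ms: float) -> int:
    """Pre-provision for predicted load; add one more instance if latency already misses target."""
    predicted = baseline.get(next_hour, 0.0)          # no history for this hour -> assume no load
    planned = math.ceil(predicted / per_instance_rps)  # predictive part
    if p95_ms > target_p95_ms:
        planned = max(planned, current + 1)            # reactive part: response time is degrading now
    return max(planned, 1)                             # always keep at least one instance
```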

All this fine-tuning doesn’t come without associated monitoring, management, and maintenance costs. Linthicum advocates allocating about 15% of the OPEX of private, public or hybrid clouds to CPM operations to keep efficiency high.

People Over Clouds

Agility and performance, like every other aspect of technology, are just as much about people as they are about processes. A development team that leverages individual and collaborative efficiencies and embraces best practices spanning DevOps, SecOps and NetOps will succeed at building resilient and reliable cloud applications.

Application developers and architects also need to keep in mind the other – and arguably better – half of the people that matter: the users.

Ruben Spruijt, Sr. Technologist, stresses the importance of paying attention to the needs of the business users of any application and delivering a trustworthy experience that allows them to solve problems on their own.

“IT should focus on balancing user needs with business needs,” Spruijt said.

“If users get better productivity from applications, it will serve the business goals as well. At the end of the day, it is about user experience, making it easy and fun to get work done, attracting new end-users and retaining existing ones.”

Dipti Parmar is a marketing consultant and contributing writer to Nutanix. She’s a columnist for major tech and business publications such as IDG’s CIO.com, Adobe’s CMO.com, Entrepreneur Mag, and Inc. Follow Dipti on Twitter @dipTparmar or connect with her on LinkedIn for little specks of gold-dust-insights.

© 2022 Nutanix, Inc. All rights reserved.