“More things need to be atomized,” he told IT experts gathered at .NEXT in London in late 2018. “More things need to be miniaturized. More things need to be dispersed. The one-size-fits-all approach worked fine for the first generation of cloud computing. Now, the cloud must adapt to the different needs of both consumers and creators.”

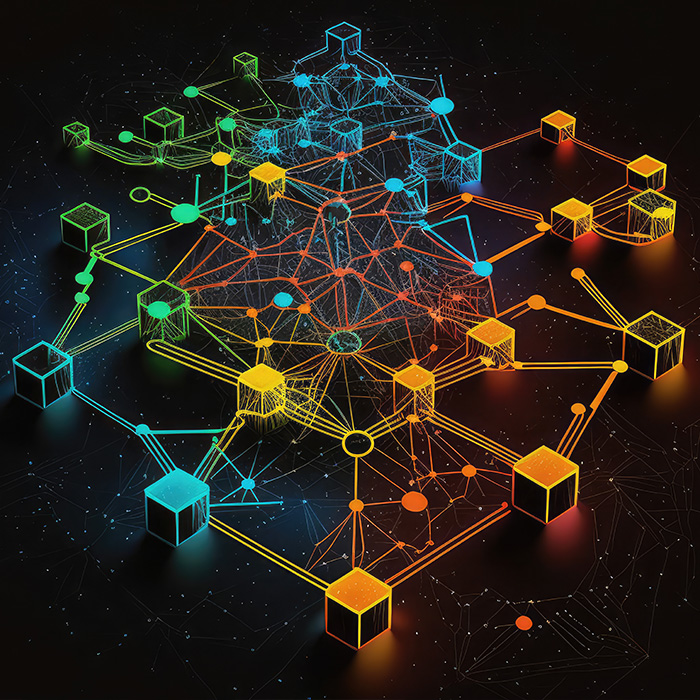

Pandey sees today’s centralized monolithic cloud systems breaking into smaller, decentralized and more dynamic sets of platforms. This will lead to better, more affordable and secure cloud services.

He has seen this process play out with earlier technologies, as computing evolved from desktops to wearables and from mainframes to serverless networks. He is convinced that public clouds dispersed across different parts of the world will allow companies to more easily abide by local laws and grow.

The Laws of Economics

The laws of economics, coupled with the rapid pace of technology innovation, are driving many to explore the benefits of renting versus owning compute infrastructure. Many turned to public cloud for easy accessibility and because it’s an operational rather than capital cost. But the cost of public cloud often has people wondering whether it makes sense to run workloads on rented or owned infrastructure, especially as owned private cloud infrastructure becomes more pervasive and able to interoperate with public cloud. This hybrid approach provides freedom of choice to run workloads in a public or private cloud, wherever performance needs are met and whichever makes economic sense.

Cloud services will need to adapt, evolve and disperse to democratize cloud computing and make it more affordable to more people around the world, according to Pandey. He said today’s cloud is monolithic and only serves the rented model.

“Today, it has to be a large data center that requires a billion dollars in investment,” he said. “The customer must come to this gigantic, Death Star-like source, and there is no other way to consume it.”

Instead, Pandey sees a future where computing resources are everywhere like “white noise.” Like a constant, accessible hum, technology will be invisible and available virtually anywhere.

To get there, the behemoth cloud services of today will be joined by many smaller clouds that cost a few million rather than billions of dollars to build.

“The whole centralized concept of everything going to a few data centers is just going away, as controlling data that’s spread across the world becomes more complex,” Mirani said.

Instead of building big central data centers, Mirani wants to help cloud service providers build their presence in different countries so they can give customers more control over where data can go.

The Law of Physics

Lastly, the convergent clouds tie into the third tenet of modern computing: the law of physics. If the past is proof, demand for data exceeds the capacity of available pipelines. This data gravity is a constant struggle. The demand for more data is unlikely to level off, ever, according to Pandey.

“It’s important to understand that you will always be network-challenged,” he said. “There is always a point where the data will grow at a faster rate than the pipe.” He said the best way to solve that problem is to move the compute to the data rather than the data to the compute.

In a world increasingly driven by data, the laws of the land, economics and physics make things more difficult and more expensive to manage. But these three laws are also pointing the way to a future where computing becomes more secure, affordable, pervasive, almost invisible and as easy to consume as a breath of fresh air.

Damon Brown is a contributing writer. He writes a daily column for Inc. Follow him on Twitter @browndamon.

© 2019 Nutanix, Inc. All rights reserved.