Scaling infrastructure and capabilities has always been a pressing priority for any IT department worth its salt. As businesses become information-driven, collect, use, and generate more data, and rely on more technology and computing, the resources and computing power they need increase exponentially.

Gartner predicted that by the end of 2020, over 90 percent of organizations would use some sort of hybrid infrastructure for their IT deployment and services. As businesses grow in size and complexity, they will need an evolving mix of public and private clouds as well as agile, software-defined on-premises systems that unify tracking and control of disparate hardware such as servers, networks and storage arrays.

Virtualization, the technology that promised this utopian unification, somehow didn’t manage to catch on as a simple, cost-effective, and pervasive option in either SMBs or the enterprise. This promise is instead being fulfilled by hyperconvergence, which fulfills the storage, networking, and compute needs of an organization using virtualized servers linked with public or hybrid cloud deployments, with adequate levels of automation and abstraction.

It is more than hyperconverging the hardware. It's about hyperconverging IT: storage and server management coming together.

This transformation from virtualization to hyperconvergence warrants a closer look.

What is Virtualization?

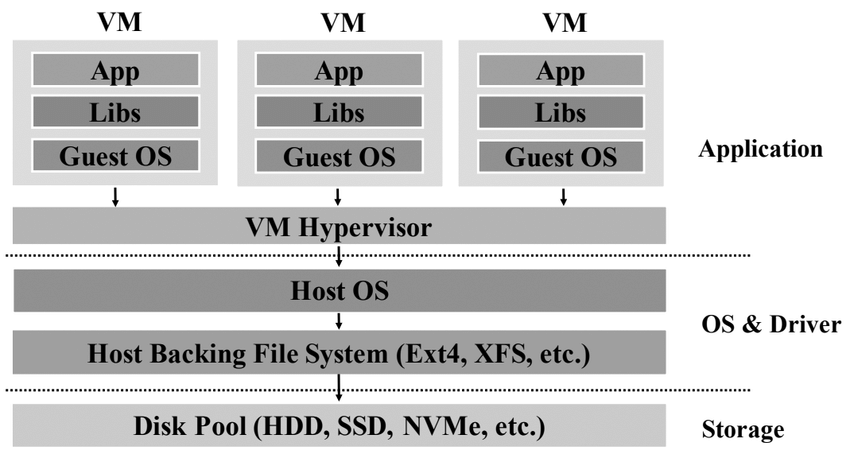

Think Gmail. Where does it exist? In a mobile device? In a laptop? On the internet? The answer is in the cloud. Extend that analogy to the hardware/software system in an organizational environment. Virtualization is a software-based, virtual representation of applications, servers, storage, or networks, where the physical devices are abstracted by software called a hypervisor, which runs on top of the operating system, and are managed via a single user interface. A hypervisor can manage multiple virtualized servers, typically called virtual machines (VMs).

The promise of virtualization was operational efficiency, increased availability of resources, and portability:

- It allowed IT admins to run applications by divvying up existing physical hardware and servers into VMs with their own sets of CPU, memory, and I/O resources.

- VMs can be cloned, replicated, resized, or moved from one server to another. They are hardware-independent.

- A VM is isolated or upgraded as a unit when the server needs maintenance or updates.

- Multiple VMs can be monitored, managed, and troubleshot from a single, centralized administrative interface.

- Desktop and OS virtualization (which are different from each other) allow IT to deploy hundreds of simulated desktop environments, reducing bulk hardware costs.

Virtualization allowed organizations to save on hardware costs by repurposing their existing inventory of desktops and servers into inexpensive “thin clients” or having employees bring their own devices.

The Not-So-Obvious Cons of Virtualization

The ease of data use and availability of resources defeat the purpose of virtualization. In the enterprise at least, where application and data criticality keep increasing, datacenter footprints tend to grow over time.

- Availability of the Virtual Desktop Infrastructure (VDI): Availability assurance turned out to be too big a responsibility for virtualization – instead of saving costs, VDIs simply shifted them. Companies saved money on user workstations, but had to upgrade primary servers, compute power, storage, and network systems to maintain the experience and convenience that users were accustomed to. Significant re-architecting of the infrastructure is also needed in order to handle known and unknown spikes in application or workload usage.

- Control and consistency: Just a decade ago, IT departments used to pride themselves on their ability to make a “golden image” or identical desktop environments available to all users in a specific role. Employees with this standard configuration had similar tasks, responsibilities, and requirements. Today’s workplace is a lot more complex, flexible, and heterogeneous. Workers, vendors, and partners use and share a lot of incongruous devices running a variety of apps. These preferences are not up for homogenization or standardization, given the evolving nature of knowledge-based work.

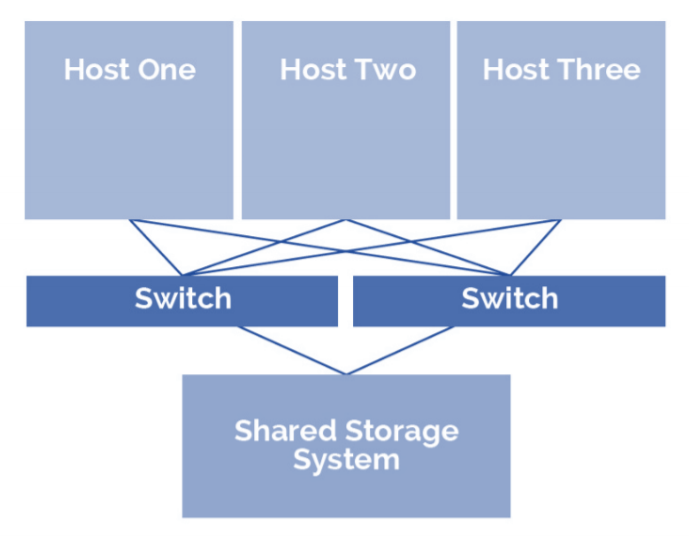

- Failover: As the diagram above shows, when a physical server fails, the VM can be restarted on other hosts. However, all files that users work with reside on shared storage. Thus, many organizations end up with a 3-2-1 design where the shared storage is a single point of failure, unless they implement a redundant SAN or NAS array. These are expensive and complex to maintain, defeating the primary purpose of virtualization.

- The rise and rise of cloud: Organizations across the board are moving to a hybrid cloud model. Virtualization technology developed in an age of on-premises data centers, but modern applications – including everyone’s favorite office suites and email, videoconferencing, productivity, and file storage apps – now run from the public cloud, and today’s users aren’t willing to compromise on this convenience. A partial solution is offered by Desktop-as-a-Service (DaaS), where all the “desktops” a company needs are hosted and managed by a cloud service provider. The DaaS provider provides compute, storage, and network resources, maintains and updates applications, and stores users’ files. However, this cedes control of organizational data and systems uptime to third-party providers.

The potential solution to all these sticky problems that have plagued virtualization for decades is probably hyperconvergence.

What Is Hyperconvergence and How Does It Improve Virtualization?

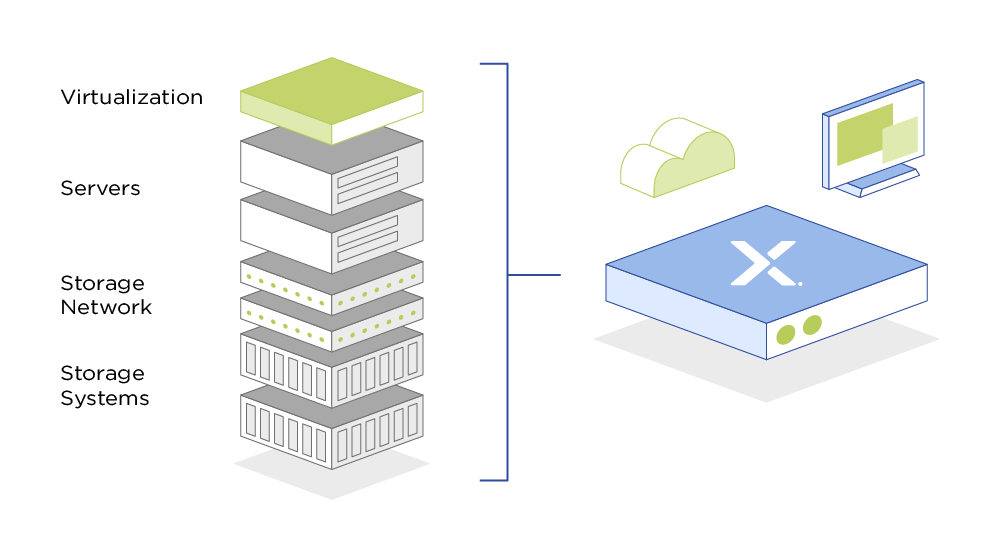

The term hyperconvergence is a compound of hypervisor (the software that manages multiple VMs) and convergence (a preconfigured combination of software and hardware where the compute, storage, and networking components are discrete and scale independently, but management is unified).

A hyperconverged infrastructure (HCI) combines hypervisor, compute, storage and networking in modular building blocks called nodes. It also includes backup, replication, deduplication, and cloud gateway integration. This saves costs in multiple ways – buying HCI appliances with the datacenter components bundled is cheaper than individual modules, and simplifies updates, maintenance, and upgrades.

Hyperconvergence improves upon virtualization in both its purported primary benefits: cost savings and operational efficiency. “Companies are looking to rein in IT costs,” said Scott Lowe, CEO of ActualTech Media. “The hyperconverged infrastructure is simpler to deploy and manage. It’s just much easier than adding all those point solutions to your infrastructure.”

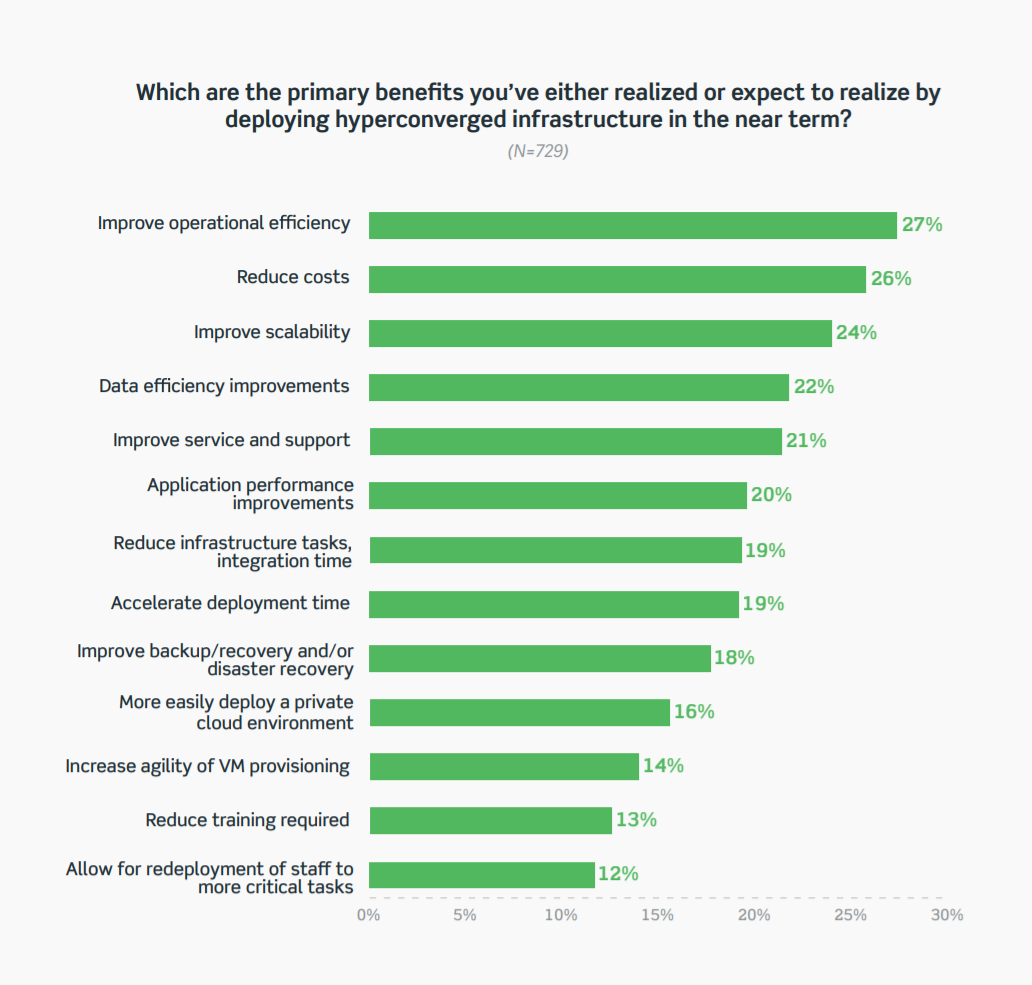

Surveys of companies that have deployed HCI confirm this.

Leveraged with virtualization, hyperconvergence offers some specific benefits.

Software-Defined Storage (SDS)

The biggest drawback or concern in traditional virtualization systems – failure of centralized storage – is overcome by one of the core features of an HCI.

Hyperconvergence takes on-premises systems and datacenters back to the direct-attached storage architecture in place of shared storage arrays, but adds distributed SDS technology to the mix. The SDS layer creates an abstraction between the physical storage and the workload that will consume it – in virtualization, this is a VM. Thus, VMs running in a cluster can leverage storage that is local to the node, residing on another node, or remote, without being aware of the actual physical systems involved.

Further, the storage system can be pooled and managed by multiple VDIs. In addition to storage virtualization, an SDS allows for policy-based provisioning, deduplication, replication, snapshotting, and backups.
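
The abstraction described above can be sketched with a toy in-memory model. This is only an illustration under stated assumptions: the `Node` and `StoragePool` classes and their methods are hypothetical names, not any vendor's API. The point is that the caller addresses a block by ID and never learns which node holds it.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """One HCI node with direct-attached storage (modeled as a dict)."""
    name: str
    blocks: dict = field(default_factory=dict)


class StoragePool:
    """Hypothetical SDS layer: callers address blocks by ID, never by node."""

    def __init__(self, nodes):
        self.nodes = list(nodes)
        self.placement = {}  # block_id -> node holding the block

    def write(self, block_id, data, prefer=None):
        # Keep the block where it already lives; otherwise place it on the
        # preferred (local) node if given, else on the least-filled node.
        target = self.placement.get(block_id)
        if target is None:
            target = prefer or min(self.nodes, key=lambda n: len(n.blocks))
        target.blocks[block_id] = data
        self.placement[block_id] = target

    def read(self, block_id):
        # The caller never learns which physical node served the read.
        return self.placement[block_id].blocks[block_id]


nodes = [Node("node-a"), Node("node-b")]
pool = StoragePool(nodes)
pool.write("vm1-disk0", b"local data", prefer=nodes[0])
pool.write("vm1-disk1", b"remote data", prefer=nodes[1])
```

A VM reading `vm1-disk1` through `pool.read` gets the same interface whether the block sits on its own node or on another one.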

Efficient Resource Utilization

The hypervisor has evolved into one of the tightly integrated software components that make up a hyperconverged platform: storage, compute, networking, and management. The software pools and abstracts underlying resources and dynamically allocates them to applications running in VMs or containers (HCI’s equivalent of isolated workloads).

With traditional virtualization, a workload doesn’t use up all the resources made available to its VM. These inefficiencies are corrected by containers, which create abstractions at the OS level, enabling modular and distinct functions of each app to run on the same physical resources. Consequently, a few servers can run hundreds of workloads with single, centralized management.

Simplifying Complexity

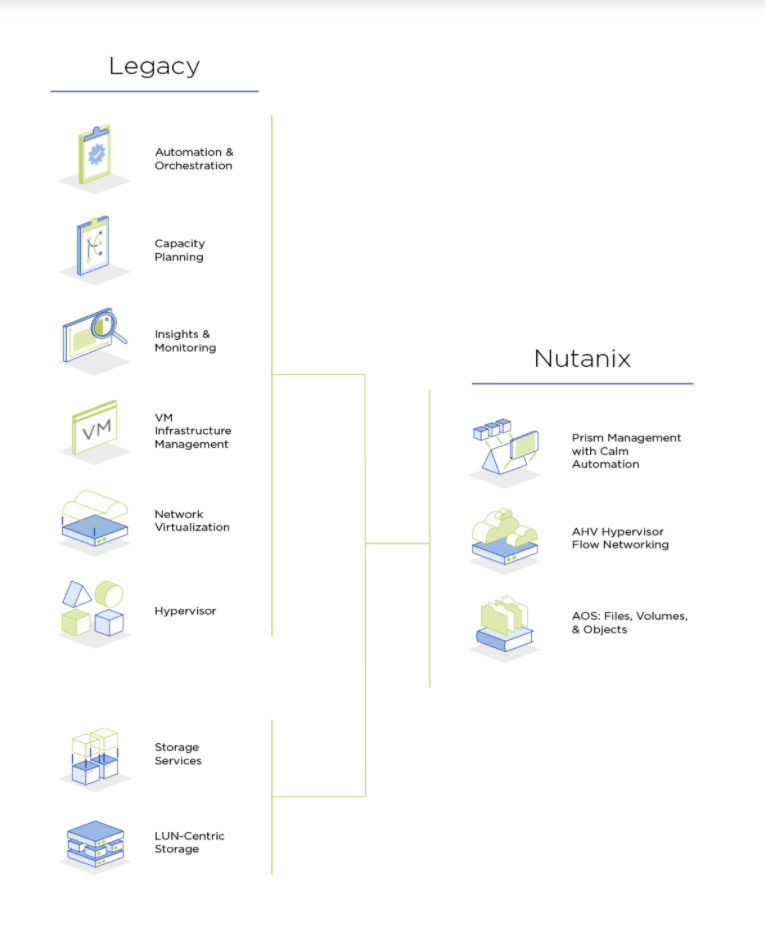

Implementing and managing virtualization come with significant complexities built in. Separate systems for compute, storage, backup and disaster recovery can all converge into an efficient stack – or even a single appliance – in an HCI. Virtualization is part of the native private cloud or hybrid cloud solution offered by many HCI vendors such as Nutanix. Virtual networking, security, automation, and other infrastructure management functions are delivered with one common control plane and interface.

With streamlined common workflows and invisible virtualization, IT admins can boost management efficiency in multiple ways:

- ‘Single pane of glass’ management for storage, compute, and security eliminates downtime and maintenance windows.

- An application-centric view of the network allows monitoring of every node in the cluster, along with details of each VM and app, and their interconnections within physical and virtual networks.

- Role-based access control lets user and group entities perform specific actions on VMs, apps, clusters, reports, and other resources.

- A unified API gateway enables automation of end-to-end workflows across thousands of geographically distributed VMs, removing the need for single-site management.
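
The role-based access control mentioned in the list above can be illustrated with a minimal sketch. The role names, entities, and the `is_allowed` helper are all hypothetical and chosen for illustration; a real HCI platform defines its own roles and permission model.

```python
# Hypothetical role definitions: role name -> set of (resource_type, action).
ROLE_PERMISSIONS = {
    "vm-operator": {("vm", "power_on"), ("vm", "power_off"), ("vm", "snapshot")},
    "auditor": {("report", "read"), ("cluster", "read")},
    "cluster-admin": {("vm", "*"), ("cluster", "*"), ("report", "*")},
}

# Hypothetical assignments of users or groups to roles.
ASSIGNMENTS = {
    "alice": {"vm-operator"},
    "ops-team": {"cluster-admin"},
    "bob": {"auditor"},
}


def is_allowed(entity: str, resource_type: str, action: str) -> bool:
    """Return True if any role held by the entity grants the action."""
    for role in ASSIGNMENTS.get(entity, set()):
        grants = ROLE_PERMISSIONS.get(role, set())
        if (resource_type, action) in grants or (resource_type, "*") in grants:
            return True
    return False
```

Here `is_allowed("alice", "vm", "snapshot")` is granted through the `vm-operator` role, while `is_allowed("bob", "vm", "power_off")` is denied because the `auditor` role carries only read permissions.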

“Hyperconverged infrastructure remains the primary growth driver in the converged systems market,” said Sebastian Lagana, Research Manager at IDC. “Reduced operating complexity, ease of deployment, and excellent fit within hybrid cloud environments continue to drive HCI adoption across a broad range of customers and workloads.”

Data Efficiency and Availability

Hyperconvergence uses a “true” shared resource pool and reclaims overhead that is allocated to each LUN in traditional virtualization implementations. This means administrators can plan capacity and manage I/O throughput much more efficiently. With thin provisioning for all VMs, hyperconvergence makes sure storage capacity is used only when applications need it.
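
As a rough illustration of thin provisioning, the sketch below models a volume that promises capacity without reserving it. The `ThinVolume` class is a hypothetical toy, not how any particular HCI product implements it; it only shows that space is consumed when a block is actually written.

```python
class ThinVolume:
    """Toy thin-provisioned volume: capacity is promised, not reserved."""

    def __init__(self, provisioned_blocks: int):
        self.provisioned_blocks = provisioned_blocks
        self.blocks = {}  # only blocks that were actually written

    def write(self, index: int, data: bytes) -> None:
        if not 0 <= index < self.provisioned_blocks:
            raise IndexError("write beyond provisioned size")
        self.blocks[index] = data

    def read(self, index: int) -> bytes:
        # Unwritten blocks read back as a zero byte without consuming space.
        return self.blocks.get(index, b"\x00")

    @property
    def used_blocks(self) -> int:
        return len(self.blocks)


# Three VMs are each promised 1,000 blocks, but write very little.
volumes = [ThinVolume(1000) for _ in range(3)]
volumes[0].write(0, b"boot")
volumes[0].write(1, b"data")
volumes[1].write(0, b"boot")

provisioned = sum(v.provisioned_blocks for v in volumes)
consumed = sum(v.used_blocks for v in volumes)
```

In this example the pool has promised 3,000 blocks in total but physically holds only the three that were written, which is the gap that capacity planning can exploit.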

Further, the way data is stored also contributes to data efficiency. In an HCI, new data is striped and mirrored across all disks in a cluster. Multiple copies of the data ensure better read performance as well as availability in the event of a failure, unlike simple virtualization.

Earlier, most organizations didn’t have the budgets to buy fully redundant storage systems. They were already stretched thin by the infrastructure necessary for virtualization in the first place. So, they resorted to the high-risk 3-2-1 design mentioned earlier. An infrastructure upgrade to HCI means organizations have the advantage of built-in, highly available storage. Even if a physical server fails, all VMs that run on it are automatically restarted on another host in the cluster, and no data is lost.
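
The restart behavior described above can be sketched as follows, assuming a simplified model in which each VM's data is mirrored on exactly two nodes. The `Cluster` class and its methods are hypothetical names for illustration, and the sketch deliberately omits what a real system would also do, such as rebuilding the lost copy on a third node.

```python
class Cluster:
    """Toy HCI cluster: every VM's data is mirrored on two nodes."""

    def __init__(self, node_names):
        self.alive = set(node_names)
        self.running_on = {}   # vm -> node currently running it
        self.replicas = {}     # vm -> set of nodes holding a copy of its data

    def start_vm(self, vm, primary, mirror):
        self.running_on[vm] = primary
        self.replicas[vm] = {primary, mirror}

    def fail_node(self, node):
        """Mark a node as failed and restart its VMs on surviving nodes."""
        self.alive.discard(node)
        for vm, host in self.running_on.items():
            if host != node:
                continue
            survivors = sorted(self.replicas[vm] & self.alive)
            if not survivors:
                raise RuntimeError(f"no surviving copy of {vm}")
            # Restart where a copy of the data already lives.
            self.running_on[vm] = survivors[0]


cluster = Cluster(["node-a", "node-b", "node-c"])
cluster.start_vm("web", primary="node-a", mirror="node-b")
cluster.start_vm("db", primary="node-b", mirror="node-c")
cluster.fail_node("node-a")
```

After `node-a` fails, the `web` VM is restarted on `node-b`, which already holds a mirror of its data, while `db` keeps running where it was.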

Application-Centric Network Security

It’s not enough to have a secure platform and infrastructure. It’s also important to focus on the source of business value, that is, the applications and data that an organization uses day in and day out. Virtualization, cloud, and pretty much every IT architecture have security controls that apply in “layers,” with subsequent protections in place if one layer is breached. With hyperconvergence, security is incorporated into the entire infrastructure stack, including storage, virtualization, and management.

Historically, data centers and VDIs have banked on firewalls to provide perimeter-based protection to data and applications in a typically large group of servers. The drawback here is that if an attacker or some malware is able to get past this perimeter, everything inside becomes a target.

Hyperconvergence mitigates this risk by creating granular “security containers” that are only limited by the capacity of legacy network appliances. This “microsegmentation” of security containers enables application level security down to individual VMs and helps prevent the spread of malware from one VM to another. It is also possible to filter and restrict data center traffic based on user roles in the VDI space.
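
A minimal sketch of the microsegmentation idea follows, assuming a default-deny policy with explicit allow rules between application tiers. The rule format, tier names, ports, and the `is_flow_allowed` helper are hypothetical and do not reflect any specific product's policy language.

```python
# Hypothetical policy: explicit allow rules between application tiers.
# Each rule is (source_tier, destination_tier, destination_port).
ALLOW_RULES = {
    ("web", "app", 8080),
    ("app", "db", 5432),
}

# Hypothetical mapping of VMs to tiers.
VM_TIER = {
    "web-01": "web",
    "app-01": "app",
    "db-01": "db",
}


def is_flow_allowed(src_vm: str, dst_vm: str, port: int) -> bool:
    """Default deny: a flow passes only if an allow rule matches it."""
    src, dst = VM_TIER.get(src_vm), VM_TIER.get(dst_vm)
    if src is None or dst is None:
        return False
    return (src, dst, port) in ALLOW_RULES
```

With these rules, a compromised `web-01` can still reach `app-01` on port 8080, but a direct connection from `web-01` to `db-01` is rejected, which limits how far malware can spread from one VM to another.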

Cloud Operations

The enterprise has historically been plagued by slow, legacy systems that focused on control as opposed to convenience. Virtualization of on-premises infrastructure was no different. Fast forward to the present, everyday collaboration and commonplace tasks warrant some sort of access or data transfer from public cloud services such as Amazon Web Services (AWS), Google Cloud, or Microsoft Azure, and IT teams have no option but to provide the operational efficiency and convenience of these services to employees, customers, and other users.

Hyperconvergence lies at the core of a hybrid cloud or multicloud deployment that bridges the gap between traditional infrastructure and public cloud services. Many organizations have achieved true hybrid cloud capabilities by integrating -as-a-service options from various public cloud ecosystems with a mix of VM and container-based applications across datacenters. The simplicity and flexibility of HCI is also driving many organizations to move their workloads from the public cloud back on-premises by deploying private clouds that scale virtualization.

Hyperconvergence Is the New Virtualization

Virtualization has been around in one form or another since the 1960s, when IBM tried to improve the robustness of its mainframes by developing systems for smart allocation of resources. However, more than 50 years on, it has failed to take off and achieve its full potential.

Hyperconvergence, on the other hand, has been adopted by over a quarter of IT departments in the US in a relatively short span of time.

It is simplifying and unifying the management and administration of IT infrastructure in the enterprise like never before, while keeping costs and complexity to a bare minimum. It is driving digital transformation in SMBs and large organizations alike. At the end of the day, speed, scale, and resilience matter for any business function that depends on the IT infrastructure, and hyperconvergence is certainly improving all three.

Dipti Parmar is a marketing consultant and contributing writer to Nutanix. She writes columns on major tech and business publications such as IDG’s CIO.com, CMO.com, Entrepreneur Mag and Inc. Follow her on Twitter @dipTparmar or connect with her on LinkedIn.

© 2020 Nutanix, Inc. All rights reserved.
